HPSP131 - Simulations

This page contains extra R content not covered in the demonstrations and could be considered supplementary to the module. This content is useful for completing the advanced exercises from Week 8 and covers simulating data in R. In particular, we will conduct a power analysis via simulation.

Simulating a data.frame via a Custom Function

If you’re not familiar with creating custom functions in R, see the extra content page on custom functions. In order to simulate data, we will be creating a custom function that creates a data.frame based on parameters we can set in the function. We are going to recreate the data.frame from Demonstration 5 where we tested the hypothesis that cat-people are more introverted than dog-people. Before we can do that though, we must introduce some new functions:

sample()

This function randomly samples options from a vector. This function

is ideal for randomly sampling a categorical variable. For instance,

below we randomly sample 40 objects who are either in the ‘cat’ group or

the ‘dog’ group. To see more about how this function works, read

help(sample).

sample(c("cat","dog"),40,replace = TRUE)## [1] "cat" "cat" "cat" "dog" "cat" "cat" "dog" "cat" "dog" "cat" "dog" "dog" "cat" "dog" "cat" "cat"

## [17] "dog" "cat" "cat" "dog" "dog" "cat" "dog" "cat" "cat" "cat" "dog" "dog" "dog" "cat" "dog" "dog"

## [33] "cat" "dog" "dog" "cat" "dog" "dog" "cat" "dog"rnorm()

This function creates a random continuous variable that is normally

distributed. This function is ideal for sampling a continuous variable

with a normal distribution. In the example below, we create a random

normal distribution with a mean of 0 and a standard deviation of 1. To

see more about how this function works, read

help(rnorm).

rnorm(40,mean = 0,sd = 1)## [1] -1.06962793 0.11919591 1.06325664 0.87536529 0.76099596 0.40385565 0.29132465 -1.66033441

## [9] -0.05110411 -0.35364587 -0.50750913 0.70305677 -0.15099559 -0.13777169 0.76983672 2.23700001

## [17] -0.49131583 1.22692821 -0.97652513 -0.33085256 0.45377296 -1.39321219 0.10669870 0.59754400

## [25] 0.16278261 0.35267761 -0.39535554 1.06631437 -0.43415359 -1.23733216 -1.99229283 1.60433422

## [33] 0.15579134 0.93373498 -1.12575813 -0.52367428 0.48463727 -0.58934226 0.21954879 0.07842032We now have the tools to create our simulate data function, which we

will save as create_dataset. For now, it will take one

argument, n, which specifies the number of participants in the

data.frame.

We will set the mean and sd of the variable ‘introvert’ to 21.00 and 3.00 respectively, which is close enough to what we found in the class dataset.

create_dataset <- function(n){

result <- data.frame(group = sample(c("cat","dog"),n,replace = TRUE),

introvert = rnorm(n,mean = 21,sd = 3))

return(result)

}Let’s test it out:

create_dataset(20)## group introvert

## 1 cat 22.54773

## 2 cat 20.97479

## 3 dog 22.86437

## 4 dog 21.94395

## 5 dog 27.14935

## 6 dog 21.58293

## 7 dog 20.57322

## 8 cat 21.55488

## 9 dog 19.84071

## 10 dog 21.03762

## 11 dog 21.04624

## 12 cat 20.19619

## 13 cat 21.29944

## 14 dog 19.08225

## 15 cat 17.90070

## 16 cat 26.54668

## 17 dog 24.75076

## 18 dog 26.45222

## 19 cat 20.70169

## 20 cat 24.05693As you can see above, our function is creating a data.frame with 20

participants, and their scores on the group and

introvert variables are randomly determined from the

sample() and rnorm() functions

respectively.

Conduct a statistical test with simulated data.

We can do anything with this new simulated data.frame as if it were a data based on real observations. For instance, we could conduct an independent-samples t-test. Remember, the variables we created are completely random, so we would expect a non-significant result here.

t.test(introvert ~ group,create_dataset(20))##

## Welch Two Sample t-test

##

## data: introvert by group

## t = -1.3859, df = 13.123, p-value = 0.1889

## alternative hypothesis: true difference in means between group cat and group dog is not equal to 0

## 95 percent confidence interval:

## -4.633219 1.009738

## sample estimates:

## mean in group cat mean in group dog

## 20.59677 22.40851Let’s expand the function above. How about creating a function that

simulates a dataset, then runs a t-test, and then returns the results of

that test? We can do this by including the function that runs a t.test

within the function we wrote above. Note that we use the

tidy() function from the broom package to

easily access the results from the t-test.

simulate_test <- function(n){

result <- data.frame(group = sample(c("cat","dog"),n,replace = TRUE),

introvert = rnorm(n,mean = m.introvert,sd = sd.introvert))

test <- t.test(introvert ~ group, data = result) %>%

tidy()

return(test$estimate)

}simulate_test(20)## [1] -1.634167Let’s go another step further and say we want to run a t-tests on

simulated data 1000 times. Doing so will give us a distribution of

estimates given a certain statistical test and sample size. We can do

this by using the replicate() function. This function

expects two arguments, the first is the number of times we want to

conduct a function, the second is the function itself. In the case

below, the output is saved as a vector.

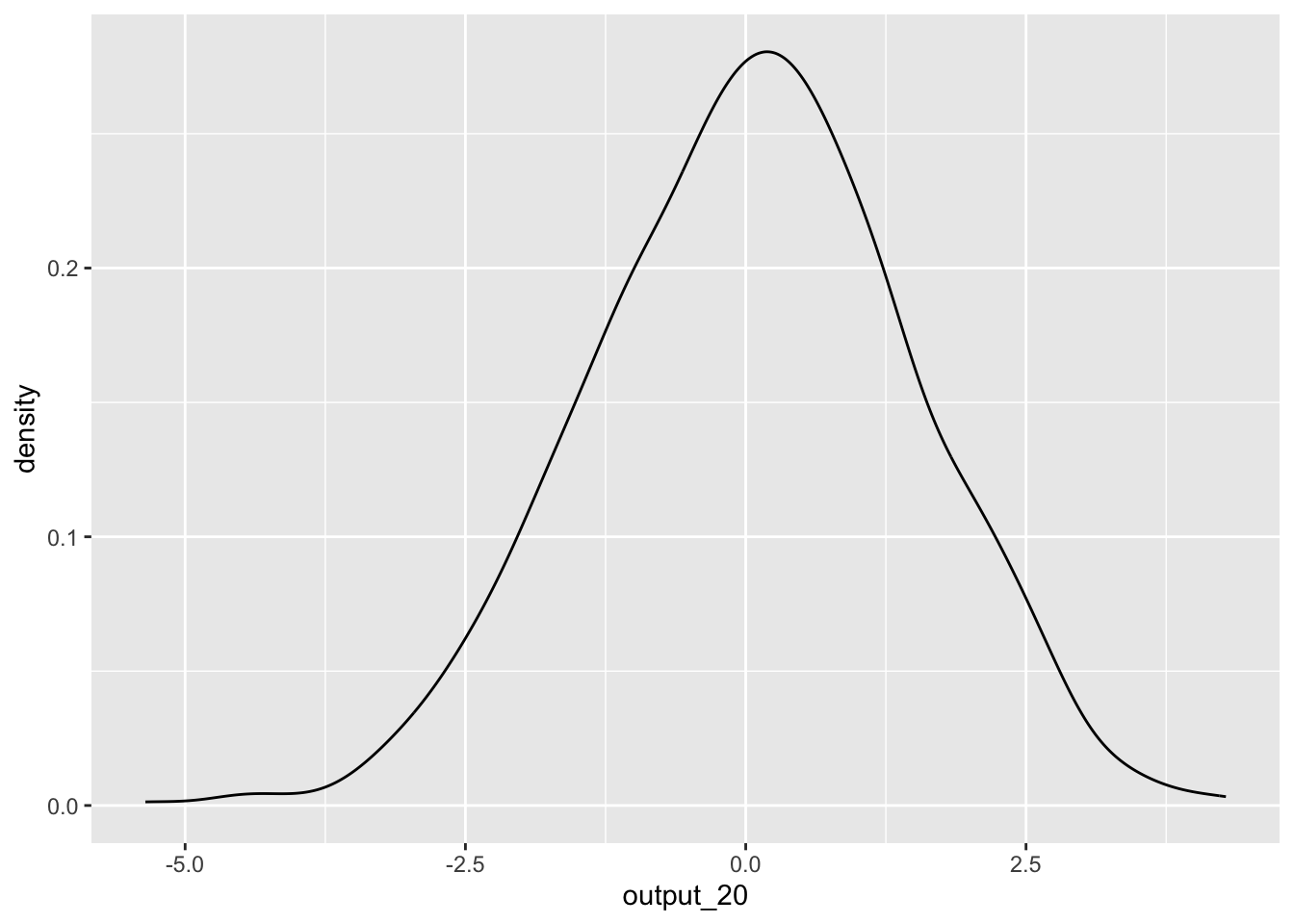

output_20 <- replicate(1000,simulate_test(20))To see the distribution of estimates, we can plot these values:

ggplot() +

geom_density(aes(output_20))

But hang on! We know there is no effect as the two variables in the t-test are completely random. So how come we are getting such a wide-range of t-statistics? This demonstrates one of the core issues with statistical tests. A sample is only a portion of the entire population, and your estimate can vary greatly depending on the slice of the population that happens to make it into your sample.

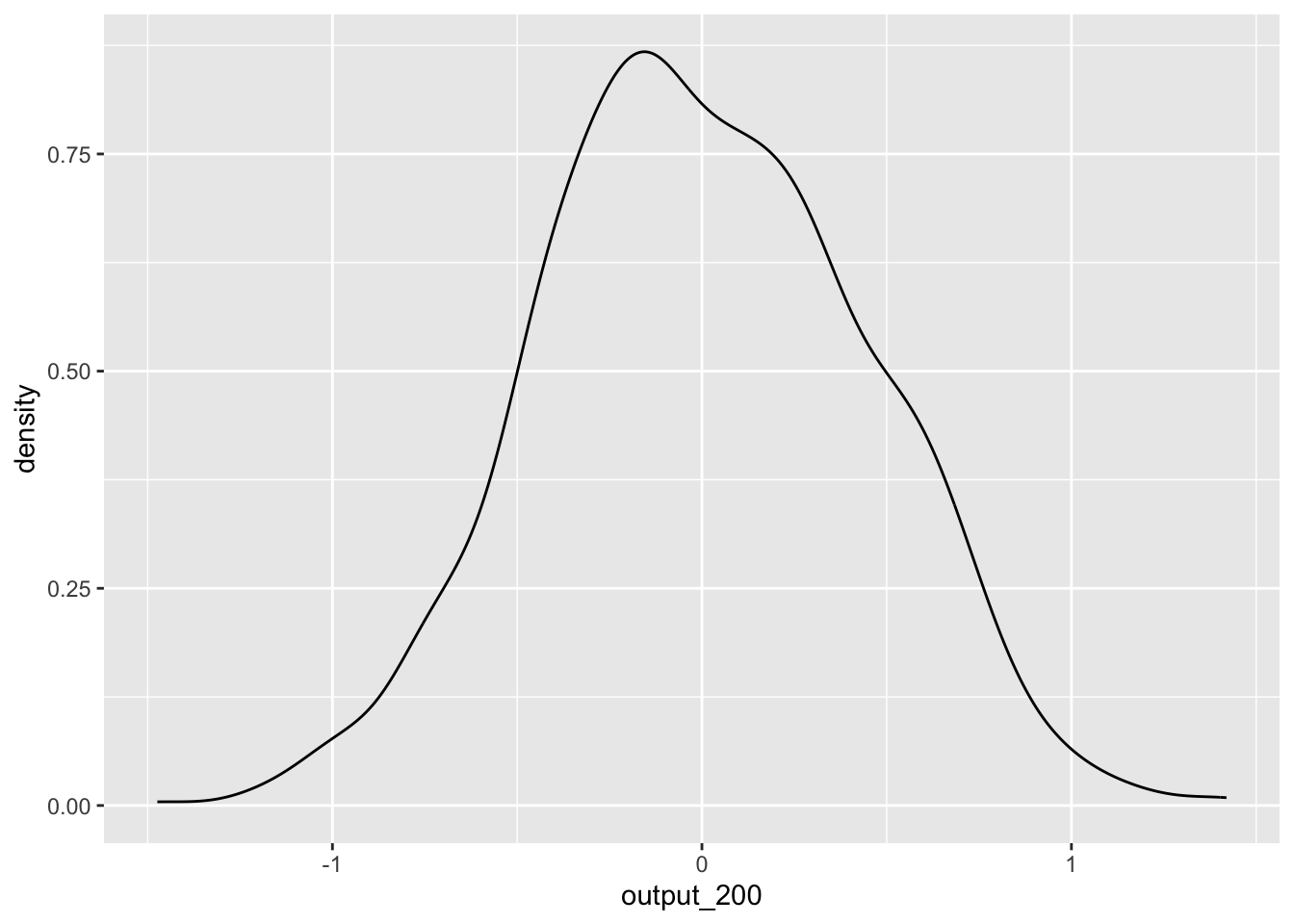

What if we increase the number of participants in our simulated dataset to 200 participants?

output_200 <- replicate(1000,simulate_test(200))ggplot() +

geom_density(aes(output_200))

We see that, while we still get a range of estimates, they are much less extreme. This is because with a larger sample size, the ‘slice’ that is our sample is more likely to be representative of our population, and therefore the t-statistic is more likely to be around the correct value. Using this knowledge, we can construct a power analysis to determine the minimum number of participants needed to accurately detect an effect.

Power analysis using simulations

Above, we replicated a data.frame where we do not expect to find a significant effect (because the variables are completely random and unrelated from each other). However, what if we expect an association? Using the same technique, we can run a power analysis to determine whether a certain research design and analysis plan has the ability to detect an effect given different sample sizes.

As covered in the lectures, power is the ability to detect an effect if it is there. Generally, things that influence power are effect size, alpha level (significance level), and number of observations.

In the code below, we again create a function, but include an additional argument for effect size (in the form of a mean difference between the ‘cat’ and ‘dog’ groups). Also, instead of returning the estimate, we will return the p-value.

simulate_test2 <- function(n,mean.diff){

result <- data.frame(group = sample(c("cat","dog"),n,replace = TRUE),

introvert = rnorm(n,mean = m.introvert,sd = sd.introvert)) %>%

mutate(mean.diff = mean.diff) %>%

mutate(introvert = ifelse(group == "cat",introvert - mean.diff/2,introvert + mean.diff/2))

test <- t.test(introvert ~ group, data = result) %>%

tidy()

return(test$p.value)

}Then, we can calculate the proportion of times we find a significant effect when alpha = .05. Let’s say that we expect a mean difference on of 2 on introversion between cat-people and dog-people.

cat.dog_difference <- 2Remember, using logical operators results in a logical variable,

which is coded as TRUE = 1 and FALSE = 0. Therefore, we can use the

mean() function to get the proportion of times out of the

200 simulations we correctly found a significant effect.

sim.results <- replicate(200,simulate_test2(20,cat.dog_difference))

power <- mean(sim.results < .05)

power## [1] 0.195The convention is that the analysis is sufficiently powered if it can detect an effect that is present 80% of the time. The power calculated for a sample size of 20 was 19.5%, which falls quite short of this threshold. What if we increased our sample size?

sim.results <- replicate(200,simulate_test2(240,cat.dog_difference))

power <- mean(sim.results < .05)

power## [1] 1By running the above simulations at different sample sizes, we will

be able to determine the sample sizes needed to detect this effect at

80% power. Below is code to automatically run the

simulate_test2() function at different sample sizes and

save the results in a data.frame. There are some complicated things

going on here, but see if you can make sense of it. You may need to look

up what certain functions do.

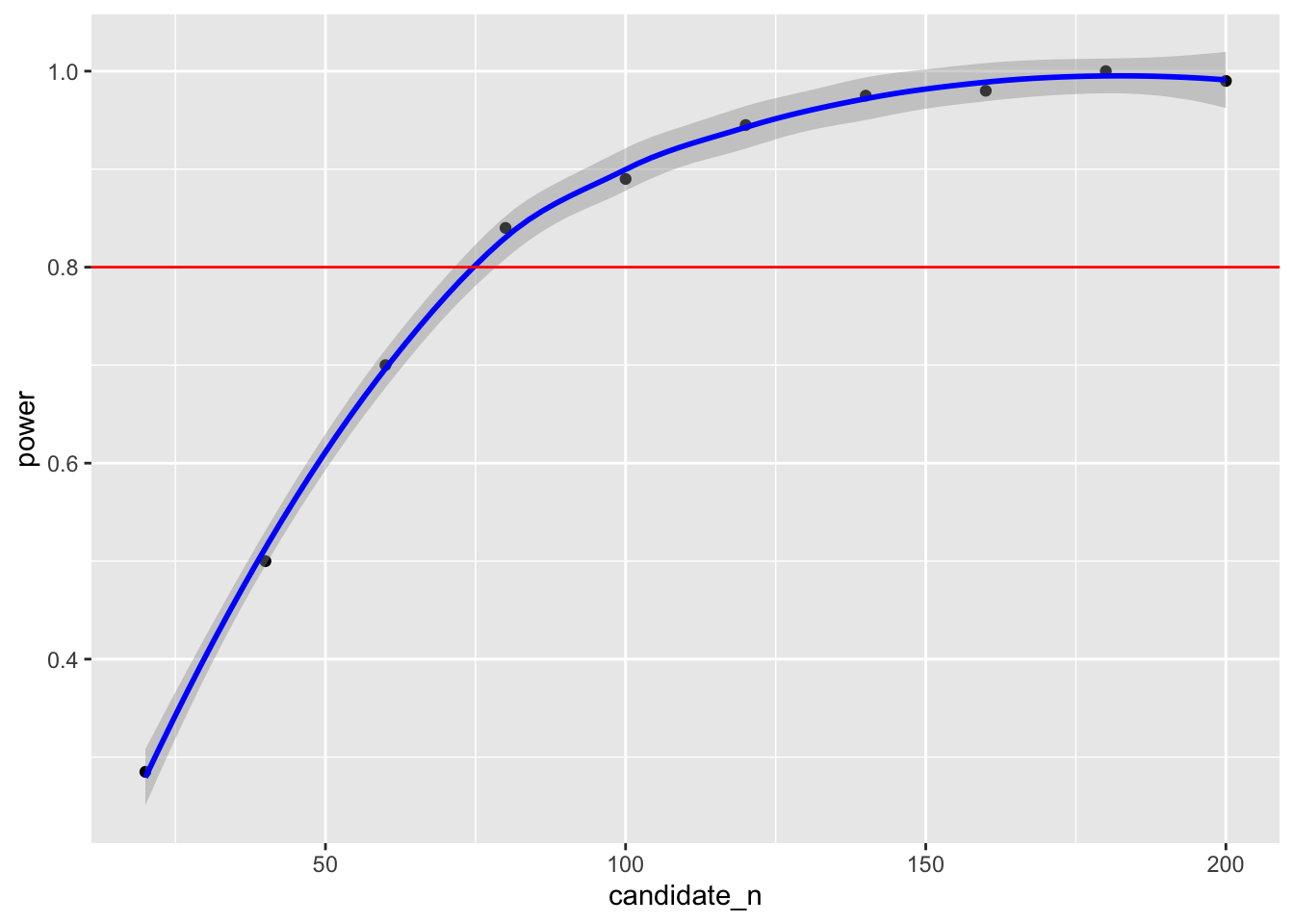

power.grid <- data.frame(candidate_n = rep(seq(20,200,by = 20),each = 200)) %>%

mutate(power = vapply(candidate_n,simulate_test2,FUN.VALUE = 1,mean.diff = cat.dog_difference)) %>%

group_by(candidate_n) %>%

summarise(power = mean(power < .05))power.grid## # A tibble: 10 × 2

## candidate_n power

## <dbl> <dbl>

## 1 20 0.195

## 2 40 0.42

## 3 60 0.585

## 4 80 0.7

## 5 100 0.84

## 6 120 0.875

## 7 140 0.91

## 8 160 0.95

## 9 180 0.965

## 10 200 0.965This suggests that to detect a mean difference of 2 on introversion between cat-people and dog-people with our data 80% of the time, we would require a sample size between 80 and 100 to be confident in our result. We can also plot the simulated power analyses to help determine the sample size required.

ggplot(data = power.grid,aes(x = candidate_n,y = power)) +

geom_point() +

geom_smooth(colour = "blue") +

geom_hline(yintercept = .80,colour = "red")

Compare the power estimate above with the one using the

pwr.t.test() function below. Note: the simulation above

calculates total N, so you will need to divide it by two to get the

sample size for each group.

library(pwr)

#First we must calculate effect size (Cohen's D)

D <- cat.dog_difference/sqrt((sd.introvert^2 + sd.introvert^2)/2)

D## [1] 0.5657714#We then put this effect size into the pwr.t.test() function.

pwr.t.test(d = D,power = .8,sig.level = .05,type = "two.sample")##

## Two-sample t test power calculation

##

## n = 50.01921

## d = 0.5657714

## sig.level = 0.05

## power = 0.8

## alternative = two.sided

##

## NOTE: n is number in *each* groupAdvanced Exercises

If you would like to practice the skills on this page, weekly exercise questions on this content are available in the advanced exercises for Week 8. For HPSP131 students, you can access the weekly exercises through the module Canvas page.