Content

These are the packages we will be using in this demonstration. If

you’ve been following the demonstrations sequentially, you should be

familiar with the tidyverse and lm.beta

packages. The pwr and pwrss packages are used

to conduct standard power analyses for common statistical tests, while

the psych package has many useful functions; however, we

will just be using it to run a mediation analysis via bootstrapping. As

always, for the packages that you are encountering for the first time,

make sure to install them onto your computer first.

library(pwr)

library(pwrss)

library(tidyverse)

library(effectsize)

library(lm.beta)

library(psych)pwr Package

For most standard analyses, such as an independent-samples t-test,

the easiest way to conduct power analyses is by using functions in the

pwr package. Functions from the pwr package

work by specifying three of four bits of information that influence

statistical power: the expected effect size, power threshold, and

significance level (alpha), and the sample size. The function will then

return whichever of the four parts was not specified. Typically, power

analyses are used to calculate the sample size required before a study

is conducted; therefore, this is what we will be focusing on here.

We will cover four examples: a power analysis for a correlation, an independent-samples t-test, a one-way ANOVA, and a multiple regression.

As mentioned above, these functions expect an effect size measure,

and each pwr function (annoyingly) uses a different effect size measure

(e.g., r, Cohen’s d, Cohen’s f, etc.). You can view all the effect sizes

used by these functions and their standard interpretation using the

cohen.ES() function. To use this function, you simply need

to state which effect size measure you’re interested in, and the size

that you expect (either small, medium, or big). Here’s an example:

cohen.ES(test = "f2",size = "medium")##

## Conventional effect size from Cohen (1982)

##

## test = f2

## size = medium

## effect.size = 0.15pwr and correlations

To conduct a power analysis for a correlation, we can use the

pwr.r.test() function. This function expects three of four

arguments:

- n = Sample Size

- r = Expected Effect size

- sig.level = Significance Level

- power = Power threshold

As discussed in the lecture, things like the significance level and power threshold are already chosen for you. Conventionally, the significance level is set to .05, while the power threshold is .80 (80%). Therefore, you only need to decide the expected effect size to calculate the required sample size.

Recall from the lecture series the following interpretation:

| Effect size | r |

|---|---|

| Small | .10 |

| Medium | .30 |

| Large | .50 |

Therefore, if we needed to calculate the required sample size for a correlation where you expect a medium effect, you could use the following code:

pwr.r.test(r = .30,sig.level = .05,power = .80)##

## approximate correlation power calculation (arctangh transformation)

##

## n = 84.07364

## r = 0.3

## sig.level = 0.05

## power = 0.8

## alternative = two.sidedThis would indicate that we would require a sample of 85 participants (rounded-up) to detect a medium effect. By playing around with the expected effect size, we can see how this impacts the required number of participants. For instance, if we expect a small effect, we will need a much larger sample size:

pwr.r.test(r = .10,sig.level = .05,power = .80)##

## approximate correlation power calculation (arctangh transformation)

##

## n = 781.7516

## r = 0.1

## sig.level = 0.05

## power = 0.8

## alternative = two.sidedpwr and t.tests

Similarly, we can use pwr.t.test() to conduct a power

analysis for an independent-samples t-test. However, one thing to note

is that the expected effect size for this function is a Cohen’s

d. Therefore, we can use the following conventions:

| Effect size | d |

|---|---|

| Small | .20 |

| Medium | .50 |

| Large | .80 |

So, if we expect a medium effect, the code would be:

pwr.t.test(d = .50,sig.level = .05,power = .80)##

## Two-sample t test power calculation

##

## n = 63.76561

## d = 0.5

## sig.level = 0.05

## power = 0.8

## alternative = two.sided

##

## NOTE: n is number in *each* groupNote that the expected effect size is the minimum for each group. Therefore, you would need a total sample of 128 participants (rounded-up).

We can also use the same function to run a power analysis for a

paired-samples t-test. To do this, we need to set the type

argument to equal “paired”. In the code below, we see for a

paired-sample t-test, we require 90 participants (rounding-up) to detect

a medium effect (Cohen’s d = .30) with 80% power. Note that the n is for

the number of pairs, so 90 is the total number of participants

needed.

pwr.t.test(d = .30,sig.level = .05,power = .80,type = "paired")##

## Paired t test power calculation

##

## n = 89.14938

## d = 0.3

## sig.level = 0.05

## power = 0.8

## alternative = two.sided

##

## NOTE: n is number of *pairs*pwr and ANOVAs

For a one-way ANOVA, we can use the pwr.anova.test()

function. This analysis assumes there will be equal numbers in each

group. Again, this function uses another measure of effect size, Cohen’s

f. Typically, in psychology we use eta-squared rather than Cohen’s f as

an effect size measure for an ANOVA. However, you can easily convert

eta-squared values to Cohen’s f using the eta2_to_f()

function from the effectsize package, like below:

eta2_to_f(.14)## [1] 0.4034733Here are the conventional interpretations for both effect size measures:

| Effect size | f | eta-squared |

|---|---|---|

| Small | .10 | .01 |

| Medium | .25 | .06 |

| Large | .40 | .14 |

This function also requires an additional parameter k,

which is the number of levels (or groups) in the IV. So, if you were

conducting a one-way ANOVA with three groups in the IV, and expect a

medium effect, the code would look like this:

pwr.anova.test(k = 3,f = .25,sig.level = .05,power = .80)##

## Balanced one-way analysis of variance power calculation

##

## k = 3

## n = 52.3966

## f = 0.25

## sig.level = 0.05

## power = 0.8

##

## NOTE: n is number in each groupAgain, the value provided for n is for each group, so you would need a total of 159 participants (rounded-up).

pwr and multiple regressions

For a power analysis for a multiple regression, we use

pwrss.f.reg() - this function comes from a separate

package, pwrss. Here, for the effect size measure, you can

use either the R-square (or the coefficient of determination) or a new

effect size measure called Cohen’s f-squared. Much like you can convert

Cohen’s f and eta-squared above, you can convert Cohen’s f-squared to

R-squared using the f2_to_r2() function from the

effectsize package.

Note that this power analysis is for the overall model - to conduct a power analysis for the effect of one predictor in the model, you would need to use the difference in R-square (i.e., how much variance does your predictor explain above all the other covariates). See the table below for standard interpretations:

| Effect size | f2 | R-square |

|---|---|---|

| Small | .02 | .02 |

| Medium | .15 | .13 |

| Large | .35 | .26 |

For the pwrss.f.reg() function, you need to also specify

the number of predictors in the model as the argument k.

Note, if you have an interaction term in your analysis, this counts as

an additional predictor. As an example, if you were conducting a

multiple regression with 4 predictors and expect a medium effect, the

code for the power analysis would look like:

pwrss.f.reg(k = 4,f2 = .15,alpha = .05,power = .80)## Linear Regression (F test)

## R-squared Deviation from 0 (zero)

## H0: r2 = 0

## HA: r2 > 0

## ------------------------------

## Statistical power = 0.8

## n = 85

## ------------------------------

## Numerator degrees of freedom = 4

## Denominator degrees of freedom = 79.45

## Non-centrality parameter = 12.667

## Type I error rate = 0.05

## Type II error rate = 0.2Or…

pwrss.f.reg(k = 4,r2 = .13,alpha = .05,power = .80)## Linear Regression (F test)

## R-squared Deviation from 0 (zero)

## H0: r2 = 0

## HA: r2 > 0

## ------------------------------

## Statistical power = 0.8

## n = 85

## ------------------------------

## Numerator degrees of freedom = 4

## Denominator degrees of freedom = 79.755

## Non-centrality parameter = 12.665

## Type I error rate = 0.05

## Type II error rate = 0.2Therefore, you would need at least 85 participants for this study.

Power Analyses via Simulations.

Above, we cover functions that allow you to conduct power analyses for common statistical techniques used in psychological research. However, if you are dealing with a more complicated design/statistical test, then these easy-to-use functions become less helpful. One way to conduct a power analysis is via simulation. For more information on this, see this extra content page.

Mediation

As covered in the lecture series, mediation describes a relationship where the influence of one variable on another can be explained through a third variable. In the example below, we will test whether the relationship between attitudes towards exercise and exercise behaviour can be explained through intentions to exercise (i.e., individuals who have positive attitudes about exercise increase their intention to exercise, which in turn increases exercise behaviour). For more information on these scales (and some of the ones we will use later), see this paper: https://search.proquest.com/docview/202682863. Note, we only use the first 5-items on each scale to keep things simple.

1. Clean data for analysis.

First, we must calculate the variables that we need for our analysis. This process should be fairly familiar by now.

data.clean <- data %>%

mutate(attitude = exercise.attitude.1 + exercise.attitude.2 + exercise.attitude.3 + exercise.attitude.4 + exercise.attitude.5,

intention = exercise.intention.1 + exercise.intention.2 + exercise.intention.3 + exercise.intention.4 + exercise.intention.5,

behaviour = exercise.behaviour.1 + exercise.behaviour.2 + exercise.behaviour.3 + exercise.behaviour.4 + exercise.behaviour.5) %>%

dplyr::select(student.no,attitude,intention,behaviour) %>%

drop_na(attitude) %>%

drop_na(intention) %>%

drop_na(behaviour)2. Run statistical test

Remember, mediation is when the effect of one IV could be explained through a third variable (mediation). If there is an effect in a model without the mediator, but that effect is reduced (or disappears) when the mediator is included, there is a chance the mediation is happening. In order to check whether our variables meet these conditions, we need to conduct a series of linear regressions.

Model 1

Here, we test whether there is an association between the predictor (attitudes) and the outcome variable (behaviour):

lm(behaviour ~ attitude,data = data.clean) %>%

lm.beta() %>%

summary()##

## Call:

## lm(formula = behaviour ~ attitude, data = data.clean)

##

## Residuals:

## Min 1Q Median 3Q Max

## -12.7448 -3.4044 -0.1905 2.8728 16.0022

##

## Coefficients:

## Estimate Standardized Std. Error t value Pr(>|t|)

## (Intercept) -4.1703 NA 3.9480 -1.056 0.294

## attitude 0.6265 0.4319 0.1411 4.441 2.65e-05 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 5.913 on 86 degrees of freedom

## Multiple R-squared: 0.1865, Adjusted R-squared: 0.1771

## F-statistic: 19.72 on 1 and 86 DF, p-value: 2.652e-05Model 2

Here, we test whether including the mediator (intention) in the model changes the relationship between the predictor (attitude) and the outcome variable (behaviour):

lm(behaviour ~ attitude + intention,data = data.clean) %>%

lm.beta() %>%

summary()##

## Call:

## lm(formula = behaviour ~ attitude + intention, data = data.clean)

##

## Residuals:

## Min 1Q Median 3Q Max

## -13.7829 -3.9093 -0.1369 3.1536 17.3018

##

## Coefficients:

## Estimate Standardized Std. Error t value Pr(>|t|)

## (Intercept) -6.8713 NA 4.2603 -1.613 0.110480

## attitude 0.5550 0.3826 0.1468 3.781 0.000289 ***

## intention 0.1829 0.1621 0.1142 1.602 0.112830

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 5.86 on 85 degrees of freedom

## Multiple R-squared: 0.2104, Adjusted R-squared: 0.1918

## F-statistic: 11.32 on 2 and 85 DF, p-value: 4.371e-05Model 3

Also, in order for there to be a mediation, we must observe a relationship between the predictor (attitude) and the mediator (intention):

lm(intention ~ attitude,data = data.clean) %>%

lm.beta() %>%

summary()##

## Call:

## lm(formula = intention ~ attitude, data = data.clean)

##

## Residuals:

## Min 1Q Median 3Q Max

## -15.9329 -3.1942 0.5897 4.2626 10.1947

##

## Coefficients:

## Estimate Standardized Std. Error t value Pr(>|t|)

## (Intercept) 14.7682 NA 3.6959 3.996 0.000136 ***

## attitude 0.3909 0.3041 0.1321 2.960 0.003973 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 5.535 on 86 degrees of freedom

## Multiple R-squared: 0.09247, Adjusted R-squared: 0.08191

## F-statistic: 8.762 on 1 and 86 DF, p-value: 0.003973Mediation Analysis

While the above is useful to see whether the conditions for a

mediation are met. In actuality, they don’t need to be done when

conducting/reporting a mediation analysis. We can conduct the actual

mediation analysis in R, which includes all the steps above, using the

aptly named mediate() function from the psych

package.

library(psych)Like all analysis functions, the mediate() function

accepts a formula and a data.frame, but also a couple of options that we

will want to change. For the formula, the mediate function takes a

specific form, where the mediator is put inside brackets on the

right-hand side of the ~ symbol:

DV ~ IV + (Mediator)So for our analysis, the code becomes the following. Note, we also

want to set the ‘std’ argument to TRUE to ensure we receive

standardised estimates, and the ‘plot’ argument to FALSE so

we are only seeing the numeric output (we will see the plot later).

model <- mediate(behaviour ~ attitude + (intention),data = data.clean,std = TRUE,plot = FALSE)

model##

## Mediation/Moderation Analysis

## Call: mediate(y = behaviour ~ attitude + (intention), data = data.clean,

## std = TRUE, plot = FALSE)

##

## The DV (Y) was behaviour . The IV (X) was attitude . The mediating variable(s) = intention .

##

## Total effect(c) of attitude on behaviour = 0.43 S.E. = 0.1 t = 4.44 df= 86 with p = 2.7e-05

## Direct effect (c') of attitude on behaviour removing intention = 0.38 S.E. = 0.1 t = 3.78 df= 85 with p = 0.00029

## Indirect effect (ab) of attitude on behaviour through intention = 0.05

## Mean bootstrapped indirect effect = 0.05 with standard error = 0.04 Lower CI = -0.02 Upper CI = 0.13

## R = 0.46 R2 = 0.21 F = 11.32 on 2 and 85 DF p-value: 2.54e-06

##

## To see the longer output, specify short = FALSE in the print statement or ask for the summaryMost of the information above is what we have encountered previously

(e.g., we are given the total and direct effects that could be

interpreted from the regressions above). The main information we are

interested in this output is the line on the mean bootstrapped indirect

effect. A large indirect effect (and consequently a greater drop between

the total effect and the direct effect) would indicate that mediation is

occurring. Since we are bootstrapping, we can tell the significance

through confidence intervals. If the range between the lower CI and the

upper CI contains zero, then the indirect effect is not significant

(i.e., a population where the indirect effect is 0 could

have produced our data). If this range does not contain zero, then we

have a significant mediation effect.

3. Plot data

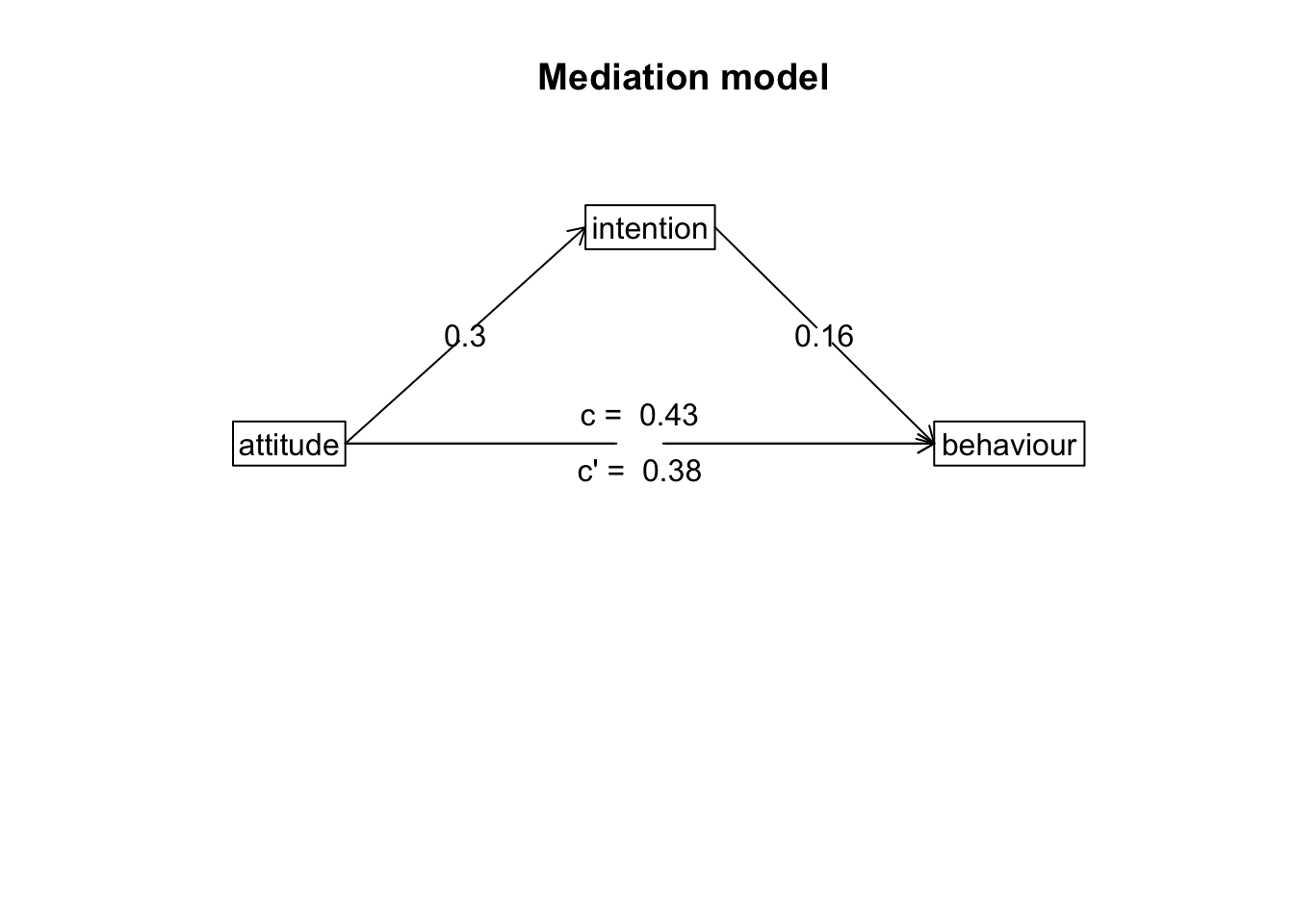

Path Diagram

For mediation, there’s no good way to plot the raw data that

visualises the mediation. The most common way to visualise a mediated

effect is through a path diagram. You can do this directly in the

mediate() function by setting the ‘plot’ argument to

TRUE or use the mediate.diagram() like

below:

mediate.diagram(model)

4. Write-up analysis.

There are several things you need to include when writing up a

mediation analysis. Writing up a mediation analysis includes reporting

the total and direct effects (in Model 1 and Model 3 above respectively,

but these numbers are also included in the output for the

mediate() function), and also the estimated indirect effect

and associated confidence intervals. Usually, you would want to

accompany the write-up with a path diagram such as the one above.

A mediation analysis via bootstrapping was conducted to assess whether the association between attitudes towards exercise and exercise behaviour was mediated by intentions to exercise. The total effect of exercise attitudes on behaviour was significant (beta = 0.43, p < .001). When intentions to exercise was included into the model, the direct effect of attitudes on behaviour was still significant (beta = 0.38, p = 0). Mediation analysis found a non-significant indirect effect (mean bootstrapped indirect effect = 0.38, 95% CI = -0.02, 0.13). This would indicate intentions to exercise does not mediates the association between exercise attitudes and exercise behaviour.

Note: While we report the results in text above, it is sometimes also easier to report the separate models in a table.