Content

We will be using the following packages in this week’s demonstration.

If you’ve been following along with the demonstrations, this is the

first time we’ve used the lavaan and semPlot

packages, so make sure to install these first before loading them. The

lavaan package includes functions that allow us to conduct

the analyses, while the semPlot package includes functions

that allows us to visualise path diagrams.

library(lavaan)

library(tidyverse)

library(semPlot)Before We Continue…

We are about to start using the lavaan package, which is

used for path analyses and structural equation models. This

demonstration is only meant to serve as an introduction to the

lavaan package, and therefore we will only discuss some of

the basic functions. You are able to add a lot of complexity to

structural equation models that we will not be touching. If you truly

wish to master this package, you will need to go beyond the content

covered here.

Mediation Analysis Through Structural Equation Modelling (SEM)

Mediation is an example of a simple SEM. We will first re-run the

mediation we conducted in Demonstration 8 in the lavaan

package, as this is a handy way of demonstrating the logic of the

lavaan package. As a reminder, we predict that the

association between exercise attitudes and exercise behaviour is

mediated by the intention to exercise.

As with all analyses, we will be following the basic procedure of:

- Clean the data for analysis.

- Run the statistical test.

- Plot the data.

- Write-up analysis.

1. Clean the data for analysis.

As always, our first step is to calculate the variables from the individual items.

The code below is identical to the code used for the mediation in Week 8 and used to calculate exercise attitude, intentions to exercise, and exercise behaviour.

data2.clean <- data %>%

mutate(attitude = exercise.attitude.1 + exercise.attitude.2 + exercise.attitude.3 + exercise.attitude.4 + exercise.attitude.5,

intention = exercise.intention.1 + exercise.intention.2 + exercise.intention.3 + exercise.intention.4 + exercise.intention.5,

behaviour = exercise.behaviour.1 + exercise.behaviour.2 + exercise.behaviour.3 + exercise.behaviour.4 + exercise.behaviour.5) %>%

dplyr::select(student.no,attitude,intention,behaviour) %>%

drop_na(attitude) %>%

drop_na(intention) %>%

drop_na(behaviour)2. Run statistical test.

Previously, when specifying a regression formula in R, we have used the following form:

y ~ x1 + x2 + x3 + …

An SEM formula is an extention of of this, where multiple regression

equations (or similar relationships) are estimated at once. Like with a

regression, we use the ~ to specify a relationship between

an outcome (on the left), and predictors (on the right).

In the table below are some other relationships that you may use when constructing a SEM.

| operator | relationship type | example | explanation |

|---|---|---|---|

=~ |

Latent Variable | y =~ x1 + x2 + x3… | y is measured by x1, x2, x3, etc. |

~~ |

Covariance | x1 ~~ x2 | x1 is correlated with x2 |

:= |

Define Parameter | effect := a*b | Estimate ‘effect’ which is made up of ‘a’ times ‘b’ |

Note: you can also label parameters by using the *

symbol before variables. If you give two variables the same label, it

will force the estimates to be the same (this comes in handy sometimes,

but not something we will use in this demonstration). Note: the label

you give cannot have the same name as a variable in the data.frame

(otherwise R will think you are referring to that variable and multiply

to two together!).

Below is the formula to conduct a mediation analysis identical to the one we conducted in Demonstration 8. See if you can understand each line and what relationship it is specifying before reading the breakdown. Remember, each line of code can be read as relationships between variables in the model.

sem.formula <- '

behaviour ~ c*attitude

behaviour ~ b*intention

intention ~ a*attitude

indirect := a*b

direct := c

total := direct + indirect

'Let’s break this down:

| line | explanation |

|---|---|

| behaviour ~ c*attitude | Behaviour is predicted from attitude, and this relationship is labelled ‘c’ |

| behaviour ~ b*intention | Behaviour is predicted from intention, and this relationship is labelled ‘b’ |

| intention ~ a*attitude | Intention is predicted from attitude, and this relationship is labelled ‘a’ |

| indirect := a*b | Define a parameter called ‘indirect’ which is made up of ‘a’ times ‘b’ |

| direct := c | Define a parameter called ‘direct’ which is made up of ‘c’ |

| total := direct + indirect | Define the total effect (the combination of direct and indirect effect). |

Essentially, the first three lines of the code are identical to

running the Model 1, Model 2, and

Model 3 that we ran when testing whether the conditions of

mediation were met, while the last three lines are like running the

mediate() function to get estimates for the indirect and

direct effect.

To run the SEM, we use the sem() function. Like all

analyses done previously, we need to specify two things: the formula

(which we saved as the object sem.formula) and the

data.frame. We also set values for a few other arguments. We set

‘standardized’ to TRUE so that we get standardised

estimates, ‘fit.measures’ to TRUE so we get model fit

indicators, and ‘rsquare’ to TRUE to get an r-square

statistics for the model.

med.sem.model <- sem(sem.formula,data2.clean)summary(med.sem.model,standardized = TRUE, fit.measures = TRUE, rsquare = TRUE)## lavaan 0.6-19 ended normally after 1 iteration

##

## Estimator ML

## Optimization method NLMINB

## Number of model parameters 5

##

## Number of observations 88

##

## Model Test User Model:

##

## Test statistic 0.000

## Degrees of freedom 0

##

## Model Test Baseline Model:

##

## Test statistic 29.323

## Degrees of freedom 3

## P-value 0.000

##

## User Model versus Baseline Model:

##

## Comparative Fit Index (CFI) 1.000

## Tucker-Lewis Index (TLI) 1.000

##

## Loglikelihood and Information Criteria:

##

## Loglikelihood user model (H0) -553.374

## Loglikelihood unrestricted model (H1) -553.374

##

## Akaike (AIC) 1116.748

## Bayesian (BIC) 1129.134

## Sample-size adjusted Bayesian (SABIC) 1113.356

##

## Root Mean Square Error of Approximation:

##

## RMSEA 0.000

## 90 Percent confidence interval - lower 0.000

## 90 Percent confidence interval - upper 0.000

## P-value H_0: RMSEA <= 0.050 NA

## P-value H_0: RMSEA >= 0.080 NA

##

## Standardized Root Mean Square Residual:

##

## SRMR 0.000

##

## Parameter Estimates:

##

## Standard errors Standard

## Information Expected

## Information saturated (h1) model Structured

##

## Regressions:

## Estimate Std.Err z-value P(>|z|) Std.lv Std.all

## behaviour ~

## attitude (c) 0.555 0.144 3.848 0.000 0.555 0.383

## intention (b) 0.183 0.112 1.630 0.103 0.183 0.162

## intention ~

## attitude (a) 0.391 0.131 2.994 0.003 0.391 0.304

##

## Variances:

## Estimate Std.Err z-value P(>|z|) Std.lv Std.all

## .behaviour 33.168 5.000 6.633 0.000 33.168 0.790

## .intention 29.944 4.514 6.633 0.000 29.944 0.908

##

## R-Square:

## Estimate

## behaviour 0.210

## intention 0.092

##

## Defined Parameters:

## Estimate Std.Err z-value P(>|z|) Std.lv Std.all

## indirect 0.072 0.050 1.432 0.152 0.072 0.049

## direct 0.555 0.144 3.848 0.000 0.555 0.383

## total 0.626 0.139 4.492 0.000 0.626 0.432If we compare the results here to mediation, we should see that we get very similar, if not the same, results.

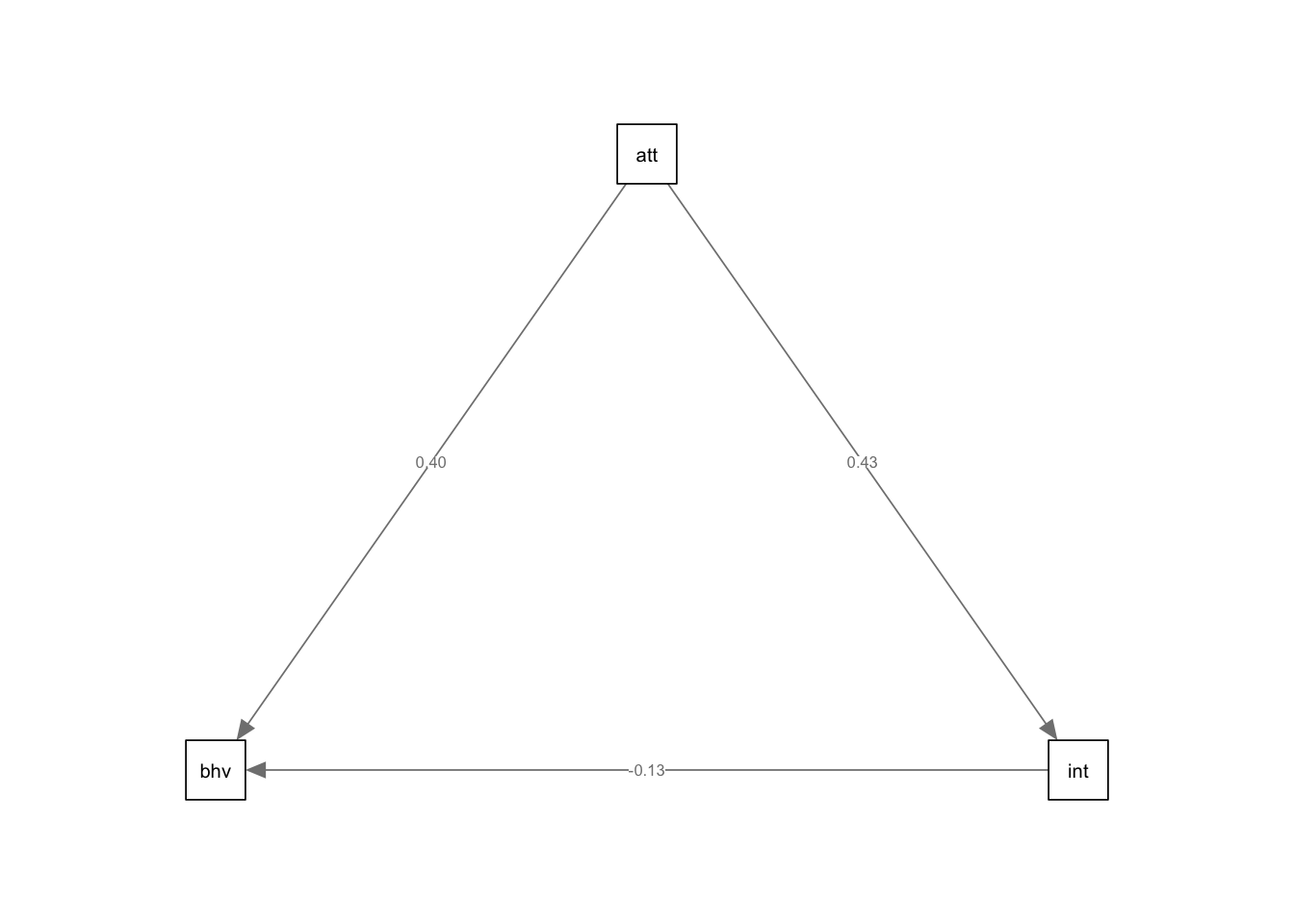

3. Plot the model.

The easiest way to plot a structural equation model is using a path

diagram. Unfortunately, this is not easy to do in ggplot2.

However, we can use the semPaths() function from the

semPlot package. This function has the advantage of being

easy to use - all you need to do is load your model estimated in laavan

and specify which paths you want to see (or hide). The disadvantage is

that editing the layout or customising the look of your path diagram can

be difficult. Therefore, in order to create publication worthy graphics,

you may want to recreate the diagram in another program.

semPaths(med.sem.model,whatLabel = "std",intercept = FALSE,residuals = FALSE)

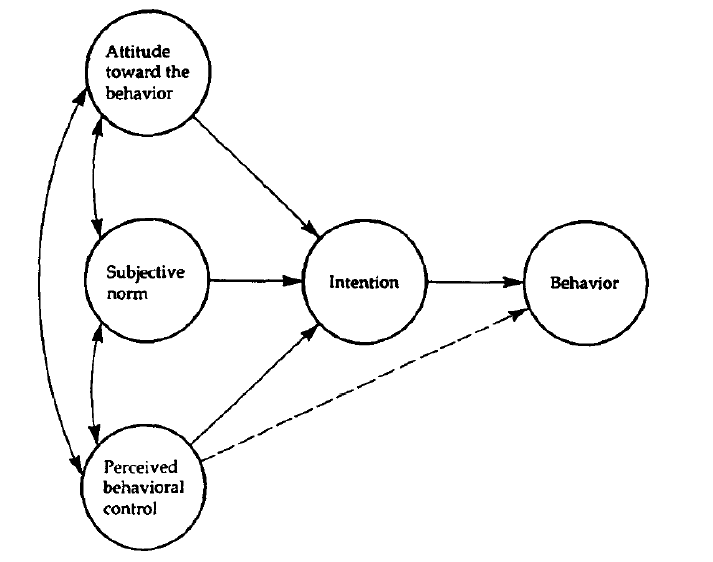

More complicated Path Analysis

We will now have a go at conducting a more complicated SEM. We will continue investigating the relationship between attitudes to exercise, intentions to exercise, and exercise behaviour, but also now investigate norms surrounding fitness and perceived behavioural control. The model we will fit is based on the Theory of Planned Behaviour, and is depicted below.

In words, we predict that attitudes towards exercise, norms about exercise, and perceived behavioural control regarding exercise will predict intention to exercise. In turn, intention to exercise predicts exercise behaviour. We also predict that perceived behavioural control regarding exercise will directly predict exercise behaviour.

1. Clean the data for analysis.

First, we must calculate the variables from each of the items. Something we have done many times now.

data2.clean <- data %>%

mutate(attitude = exercise.attitude.1 + exercise.attitude.2 + exercise.attitude.3 + exercise.attitude.4 + exercise.attitude.5,

intention = exercise.intention.1 + exercise.intention.2 + exercise.intention.3 + exercise.intention.4 + exercise.intention.5,

control = exercise.control.1 + exercise.control.2 + exercise.control.3,

norms = exercise.norms.1 + exercise.norms.2 + exercise.norms.3 + exercise.norms.4 + exercise.norms.5,

behaviour = exercise.behaviour.1 + exercise.behaviour.2 + exercise.behaviour.3 + exercise.behaviour.4 + exercise.behaviour.5) %>%

dplyr::select(student.no,attitude,intention,control,norms,behaviour) %>%

drop_na(attitude) %>%

drop_na(intention) %>%

drop_na(control) %>%

drop_na(norms) %>%

drop_na(behaviour)2. Run statistical test.

First, we need to specify the model. Below, this model is specified

as formula that lavaan can read:

model <- '

intention ~ attitude + norms + control

norms ~~ attitude

attitude ~~ control

control ~~ norms

behaviour ~ intention + control

'To break down the code above: Line 1: Attitudes, norms, and control predict intention. Lines 2 to 4: Covariance between predictors is specified explicitly. Line 5: Intention, control and attitudes predicts behaviour.

We then run the SEM model using the sem() function:

sem.model <- sem(model,data = data2.clean)summary(sem.model,standardized = TRUE,fit = TRUE, rsquare = TRUE)## lavaan 0.6-19 ended normally after 46 iterations

##

## Estimator ML

## Optimization method NLMINB

## Number of model parameters 14

##

## Number of observations 88

##

## Model Test User Model:

##

## Test statistic 1.022

## Degrees of freedom 1

## P-value (Chi-square) 0.312

##

## Model Test Baseline Model:

##

## Test statistic 89.448

## Degrees of freedom 10

## P-value 0.000

##

## User Model versus Baseline Model:

##

## Comparative Fit Index (CFI) 1.000

## Tucker-Lewis Index (TLI) 0.997

##

## Loglikelihood and Information Criteria:

##

## Loglikelihood user model (H0) -1321.988

## Loglikelihood unrestricted model (H1) -1321.477

##

## Akaike (AIC) 2671.975

## Bayesian (BIC) 2706.658

## Sample-size adjusted Bayesian (SABIC) 2662.480

##

## Root Mean Square Error of Approximation:

##

## RMSEA 0.016

## 90 Percent confidence interval - lower 0.000

## 90 Percent confidence interval - upper 0.283

## P-value H_0: RMSEA <= 0.050 0.363

## P-value H_0: RMSEA >= 0.080 0.564

##

## Standardized Root Mean Square Residual:

##

## SRMR 0.018

##

## Parameter Estimates:

##

## Standard errors Standard

## Information Expected

## Information saturated (h1) model Structured

##

## Regressions:

## Estimate Std.Err z-value P(>|z|) Std.lv Std.all

## intention ~

## attitude 0.319 0.106 3.002 0.003 0.319 0.248

## norms 0.413 0.062 6.691 0.000 0.413 0.563

## control 0.079 0.139 0.564 0.573 0.079 0.048

## behaviour ~

## intention 0.115 0.109 1.055 0.291 0.115 0.102

## control 0.537 0.172 3.120 0.002 0.537 0.292

## attitude 0.499 0.138 3.618 0.000 0.499 0.344

##

## Covariances:

## Estimate Std.Err z-value P(>|z|) Std.lv Std.all

## attitude ~~

## norms 2.902 3.739 0.776 0.438 2.902 0.083

## control 3.060 1.709 1.790 0.073 3.060 0.194

## norms ~~

## control 7.385 3.041 2.428 0.015 7.385 0.268

##

## Variances:

## Estimate Std.Err z-value P(>|z|) Std.lv Std.all

## .intention 19.043 2.871 6.633 0.000 19.043 0.577

## .behaviour 29.865 4.502 6.633 0.000 29.865 0.711

## attitude 19.962 3.009 6.633 0.000 19.962 1.000

## norms 61.210 9.228 6.633 0.000 61.210 1.000

## control 12.409 1.871 6.633 0.000 12.409 1.000

##

## R-Square:

## Estimate

## intention 0.423

## behaviour 0.289Notice above in the summary() function, we have included

the additional arguments to get extra statistics that are helpful when

it comes to report the analysis.

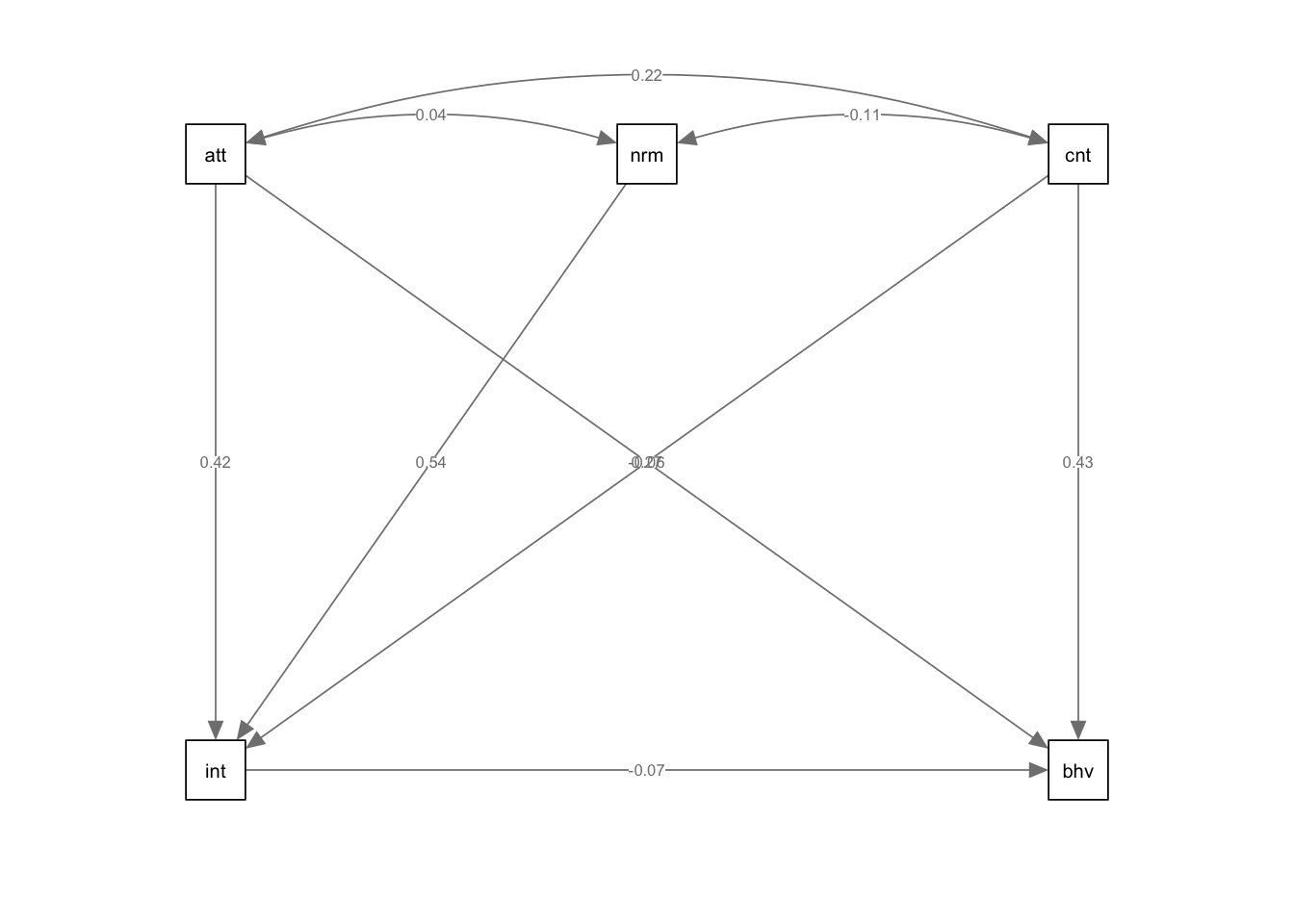

3. Plot the model.

Again, we use semPaths() to plot our SEM model. Here,

the limitations of not being able to easily organise the layout of your

plot is apparent, though it still may be helpful to visualise your

results to help your understanding.

semPaths(sem.model,whatLabel = "std",intercept = FALSE,residuals = FALSE)

Model Comparisons

Model comparisons can be used to help decide which model best explains your data with the fewest number of parameters. As covered in the lecture series, models can only be compared if they are nested (i.e., one model must be contained within the other model). For SEM, using model comparisons is particularly useful to determine the significance of individuals paths.

In the example below, we will compare the model above with a simpler model, where the path between control and behaviour is removed. The formula and code to conducted this slightly simpler model is as follows:

model2 <- '

intention ~ attitude + norms + control

norms ~~ attitude

attitude ~~ control

control ~~ norms

behaviour ~ intention

'

sem.model2 <- sem(model2,data = data2.clean)

#summary(sem.model2,standardized = TRUE,fit = TRUE, rsquare = TRUE)Note: we have not run the summary() function above to

save on space, but feel free to have a look at it.

Now that we have our two models that we will compare, the function we

use is called anova(), not to be confused with

aov() that runs ANOVA analyses.

For the anova() function, we simply have to specify the

two models we are comparing as the two arguments. This will conduct a

chi-square difference test.

anova(sem.model,sem.model2)##

## Chi-Squared Difference Test

##

## Df AIC BIC Chisq Chisq diff RMSEA Df diff Pr(>Chisq)

## sem.model 1 2672.0 2706.7 1.0215

## sem.model2 3 2690.9 2720.6 23.9366 22.915 0.34473 2 1.057e-05 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Above, we find that there is a significant difference between the two models. Therefore, we can conclude that dropping the path between ‘control’ and ‘behaviour’ significantly reduces model fit. As such, we should retain this path in the model.

While above, we use the anova() function to test the

difference between nested SEM models, we can also use this function to

compare models from other functions, such as lm().

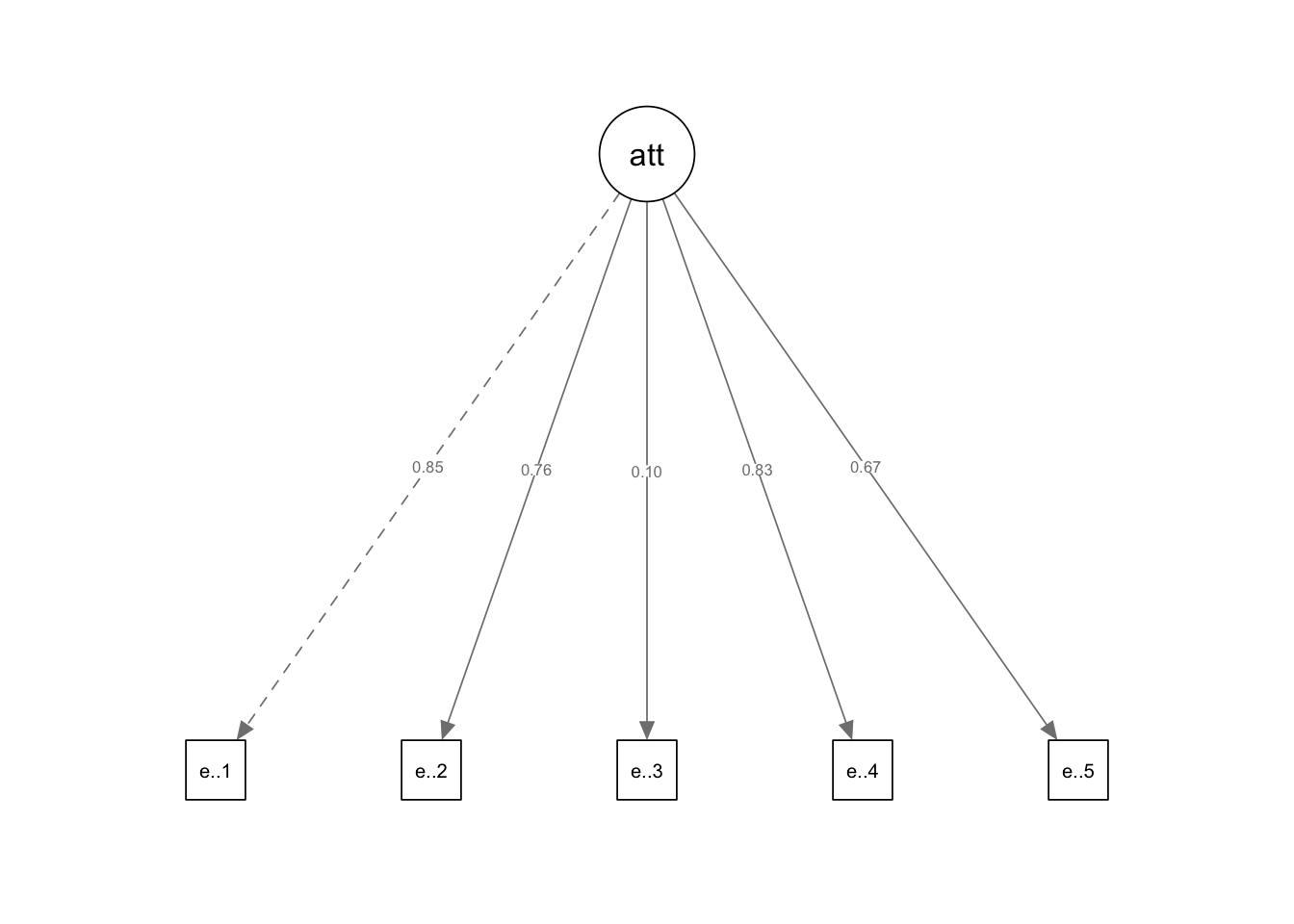

Introducing Latent Variables

Up to now, to calculate variables from individual items, we have been adding each item up. However, we could also compute latent variables, which are the underlying variables that each item is meant to tap into. Essentially, this is conducting a Confirmatory Factor Analysis.

For example, we could calculate the latent variable ‘norms’ from each of the individual items.

attitude.formula <- '

attitude =~ exercise.attitude.1 + exercise.attitude.2 + exercise.attitude.3 + exercise.attitude.4 + exercise.attitude.5

'

attitude.model <- sem(attitude.formula,data = data)summary(attitude.model,standardized = TRUE)## lavaan 0.6-19 ended normally after 29 iterations

##

## Estimator ML

## Optimization method NLMINB

## Number of model parameters 10

##

## Number of observations 88

##

## Model Test User Model:

##

## Test statistic 2.077

## Degrees of freedom 5

## P-value (Chi-square) 0.838

##

## Parameter Estimates:

##

## Standard errors Standard

## Information Expected

## Information saturated (h1) model Structured

##

## Latent Variables:

## Estimate Std.Err z-value P(>|z|) Std.lv Std.all

## attitude =~

## exercis.tttd.1 1.000 0.610 0.703

## exercis.tttd.2 1.420 0.275 5.159 0.000 0.867 0.669

## exercis.tttd.3 0.706 0.392 1.803 0.071 0.431 0.217

## exercis.tttd.4 1.076 0.195 5.508 0.000 0.657 0.741

## exercis.tttd.5 1.778 0.339 5.244 0.000 1.085 0.684

##

## Variances:

## Estimate Std.Err z-value P(>|z|) Std.lv Std.all

## .exercis.tttd.1 0.381 0.078 4.904 0.000 0.381 0.506

## .exercis.tttd.2 0.927 0.178 5.211 0.000 0.927 0.552

## .exercis.tttd.3 3.763 0.574 6.550 0.000 3.763 0.953

## .exercis.tttd.4 0.354 0.079 4.476 0.000 0.354 0.451

## .exercis.tttd.5 1.340 0.264 5.085 0.000 1.340 0.532

## attitude 0.373 0.111 3.353 0.001 1.000 1.000semPaths(attitude.model,whatLabel = "std",intercept = FALSE,residuals = FALSE)

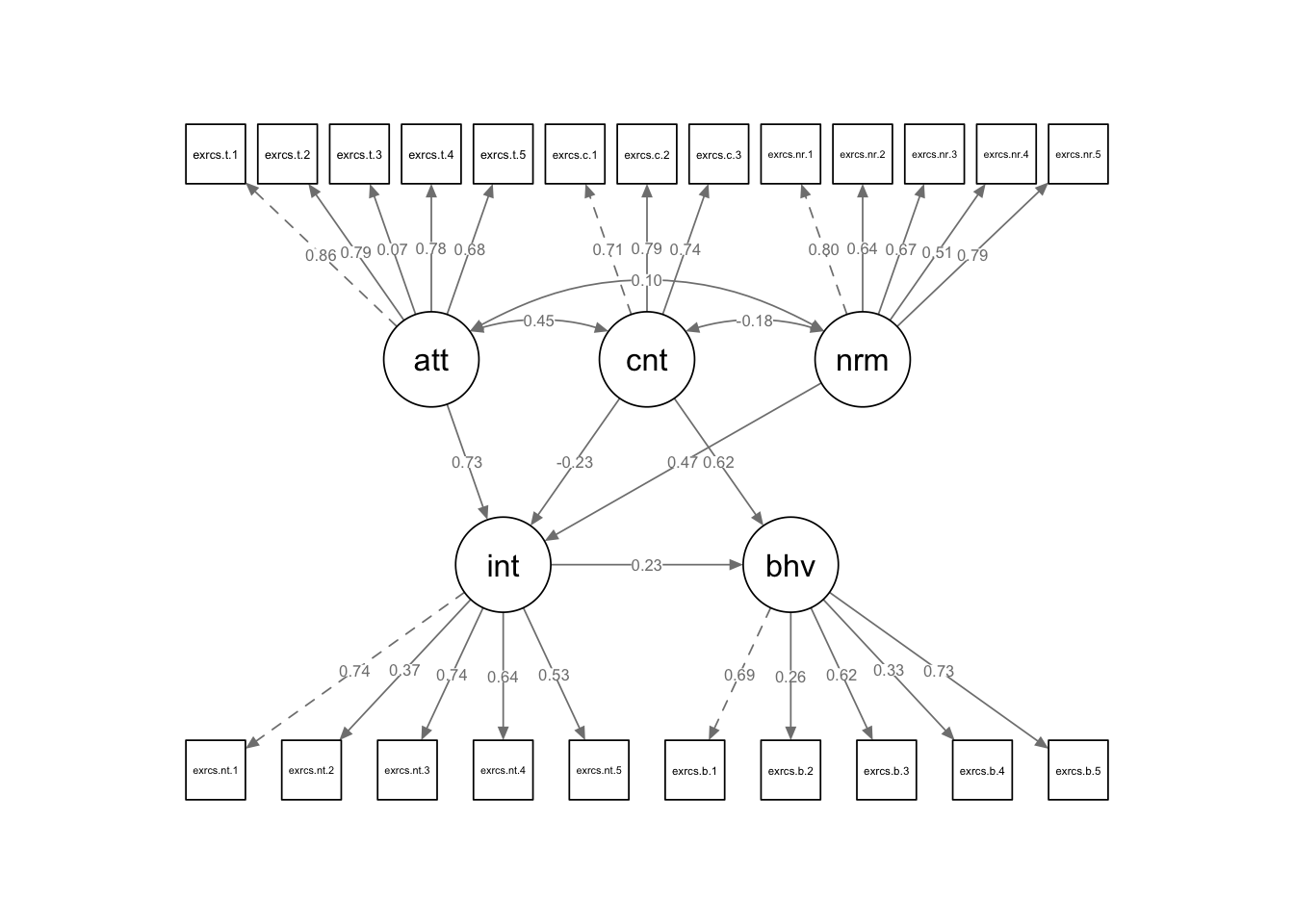

How about we compute latent variables for all the variables in the TPB model above? Doing something like this may not make a lot of sense: we will end up with a gangly model, and we may not have enough observations to get sensible results, but we can do it do it anyway! For demonstrative purposes of course… The code is provided below to run this analysis, but this code is not executed, as the output is quite long an unweildy.

Specify the Model

model <- '

attitude =~ exercise.attitude.1 + exercise.attitude.2 + exercise.attitude.3 + exercise.attitude.4 + exercise.attitude.5

intention =~ exercise.intention.1 + exercise.intention.2 + exercise.intention.3 + exercise.intention.4 + exercise.intention.5

control =~ exercise.control.1 + exercise.control.2 + exercise.control.3

norms =~ exercise.norms.1 + exercise.norms.2 + exercise.norms.3 + exercise.norms.4 + exercise.norms.5

behaviour =~ exercise.behaviour.1 + exercise.behaviour.2 + exercise.behaviour.3 + exercise.behaviour.4 + exercise.behaviour.5

intention ~ attitude + norms + control

behaviour ~ intention + control

'We won’t run the summary() just now because it is very

unwieldy, but we have covered the interpretation of the output

above.

sem.model3 <- sem(model,data = data)

summary(sem.model3,standardized = TRUE,fit = TRUE, rsquare = TRUE)