Content

Before we begin…

Remember, whenever we analyse data, we will roughly be following this procedure:

- Clean the data for analysis.

- Run the statistical test.

- Plot the data.

- Write-up analysis.

We will be using the following packages. If this is your first time using these packages, remember to install them before loading the packages.

library(tidyverse)

library(lm.beta)Reminder: Moderation (Interaction Effects)

As covered in the Lecture series, moderation is when the effect of an IV (predictor) on the DV (outcome) depends on another IV (predictor). We can test for an interaction effect in a linear regression.

In the example below, we will extend the regression we conducted last week and test the hypothesis that the association between sleep quality and stress is moderated by social support (for instance, the relationship between sleep quality and stress is more negative for participants low in social support).

Regression with Interaction Effect

1. Clean the data for analysis.

First we must calculate the scores for each scale in the analysis from the individual items. As we have done previously, we can do this by using the mutate() function. The code below is the same as the code we used last week. Remember to reverse code sleep quality so that higher scores indicate greater sleep quality.

data1.vars <- data %>%

mutate(stress = stress.1 + stress.2 + stress.3 + stress.4 + stress.5,

support = support.1 + support.2 + support.3 + support.4 + support.5,

sleep.quality = sleep1 + sleep2 + sleep3 + sleep4 + sleep5,

sleep.quality = sleep.quality * - 1) %>%

dplyr::select(student.no,stress,support,sleep.quality)When including interaction terms in a linear regression, including uncentered variables can be problematic. In order to center the variables, we can use the scale() function. The scale() function expects a numeric vector. There are two additional arguments called center and scale. If center is set to TRUE, but scale is set to FALSE, the scale() function will output the ‘centred’ variable. If both arguments are set to TRUE, the scale() function will return a ‘standardised’ argument.

Because of a quirk with the scale() function, we also need to tell R that the output is a vector. We can do this by wrapping the results from the scale() function inside a c() function.

You can see the scale() function in action below:

v <- c(3,32,5,6,12,59,96)

#Get the centered variable.

c.v <- c(scale(v,center = TRUE,scale = FALSE))

c.v## [1] -27.428571 1.571429 -25.428571 -24.428571 -18.428571 28.571429 65.571429#Get the standardised variable.

z.v <- c(scale(v, center = TRUE,scale = TRUE))

z.v## [1] -0.7782022 0.0445845 -0.7214583 -0.6930863 -0.5228546 0.8106273 1.8603896We can use this combination of the scale() and c() functions within the mutate() to calculate the standardised/centred variables of columns in our data.frame:

#Compute centred variables for analysis.

data1.clean <- mutate(data1.vars,

c.stress = c(scale(stress,center = TRUE,scale = FALSE)),

c.support = c(scale(support,center = TRUE,scale = FALSE))) %>%

#Compute standardised variables.

mutate(z.sleep.quality = c(scale(sleep.quality,center = TRUE,scale = TRUE)),

z.support = c(scale(support,center = TRUE,scale = TRUE)),

z.stress = c(scale(stress,center = TRUE,scale = TRUE)))2. Run statistical test

Recall that interaction effects are the multiplication of the two variable. Therefore, to specify an interaction, we change the formula we specify to include the multiplication of the variable whose interaction we are interested in. For the unstandardised model, make sure you include the centred variables in the formula.

#Unstandardised Model

model1 <- lm(sleep.quality ~ c.stress*c.support,data = data1.clean)

summary(model1)##

## Call:

## lm(formula = sleep.quality ~ c.stress * c.support, data = data1.clean)

##

## Residuals:

## Min 1Q Median 3Q Max

## -10.557 -3.487 0.973 3.485 11.847

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -13.72107 0.53488 -25.653 < 2e-16 ***

## c.stress -1.25686 0.28349 -4.433 2.79e-05 ***

## c.support 0.02072 0.08532 0.243 0.809

## c.stress:c.support 0.04415 0.06147 0.718 0.475

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 5.017 on 84 degrees of freedom

## Multiple R-squared: 0.1911, Adjusted R-squared: 0.1622

## F-statistic: 6.614 on 3 and 84 DF, p-value: 0.0004577Notice how R automatically includes the main effects in the model? In most cases, you will want to include the separate main effects when including an interaction term, but in the odd occassion when you want to include the interaction effect without the main effect, you can specify it using the : symbol. In other words:

sleep.quality ~ stress*support is identical to sleep.quality ~ stress + support + stress:support

Above are the unstandardised coefficients. However, in order to report in APA format, we require the standardised coefficient. Similar to with an ordinary regression, we can use the lm.beta() function to get the standardised coefficients, like here:

#Standardised Model

model1 %>%

lm.beta() %>%

summary()##

## Call:

## lm(formula = sleep.quality ~ c.stress * c.support, data = data1.clean)

##

## Residuals:

## Min 1Q Median 3Q Max

## -10.557 -3.487 0.973 3.485 11.847

##

## Coefficients:

## Estimate Standardized Std. Error t value Pr(>|t|)

## (Intercept) -13.72107 0.00000 0.53488 -25.653 < 2e-16 ***

## c.stress -1.25686 -0.43681 0.28349 -4.433 2.79e-05 ***

## c.support 0.02072 0.02399 0.08532 0.243 0.809

## c.stress:c.support 0.04415 0.07120 0.06147 0.718 0.475

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 5.017 on 84 degrees of freedom

## Multiple R-squared: 0.1911, Adjusted R-squared: 0.1622

## F-statistic: 6.614 on 3 and 84 DF, p-value: 0.00045773. Plot data

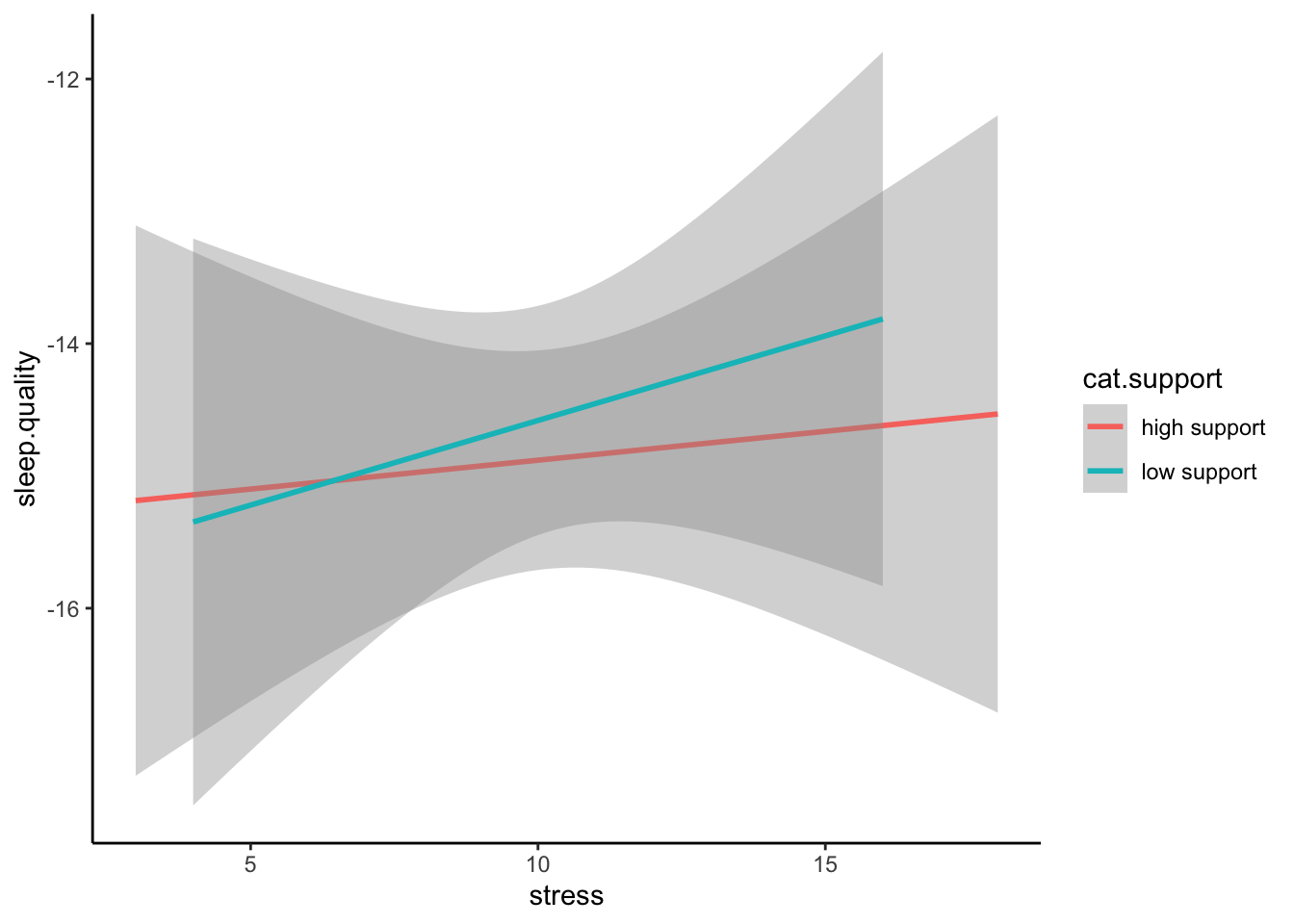

Interactions can often be difficult to intuit from just looking at the numbers in the model. Therefore, it is almost always necessary to plot them. The most common way to plot an interaction is to split the dataset in two according to the moderator: one with participants who score above the mean on the moderator, and the other with participants who score below the mean on the moderator. In our example, this means splitting the data into participants who are above and below the mean in social support. We can do this by creating a new variable using the ifelse() function within the mutate() function.

The ifelse() function works by first specify a condition as the first argument. The second argument is what happens if data from a participant meets that condition. The third arguement is what happens if a participant does not meet that condition. So in the code below, we are creating a new variable called ‘cat.support’. We want to categorise support into two levels, so the condition in the ifelse() function is z.support > 0. Since we standardised the moderator during the cleaning scale. The mean for support equals 0, so we can split the data on this. Participants who meet this condition are in the “high support” group, while those that are not are in the “low support” group.

plot.data <- mutate(data1.clean,cat.support = ifelse(z.support > 0,"high support","low support")) %>%

filter(!is.na(cat.support))We then can plot the regression line adding in a ‘group’ and ‘colour’ aesthetic to separate our data of participants with high and low support.

ggplot(plot.data,mapping = aes(x = stress,y = sleep.quality,group = cat.support,colour = cat.support)) +

geom_smooth(method = "lm") +

theme_classic()

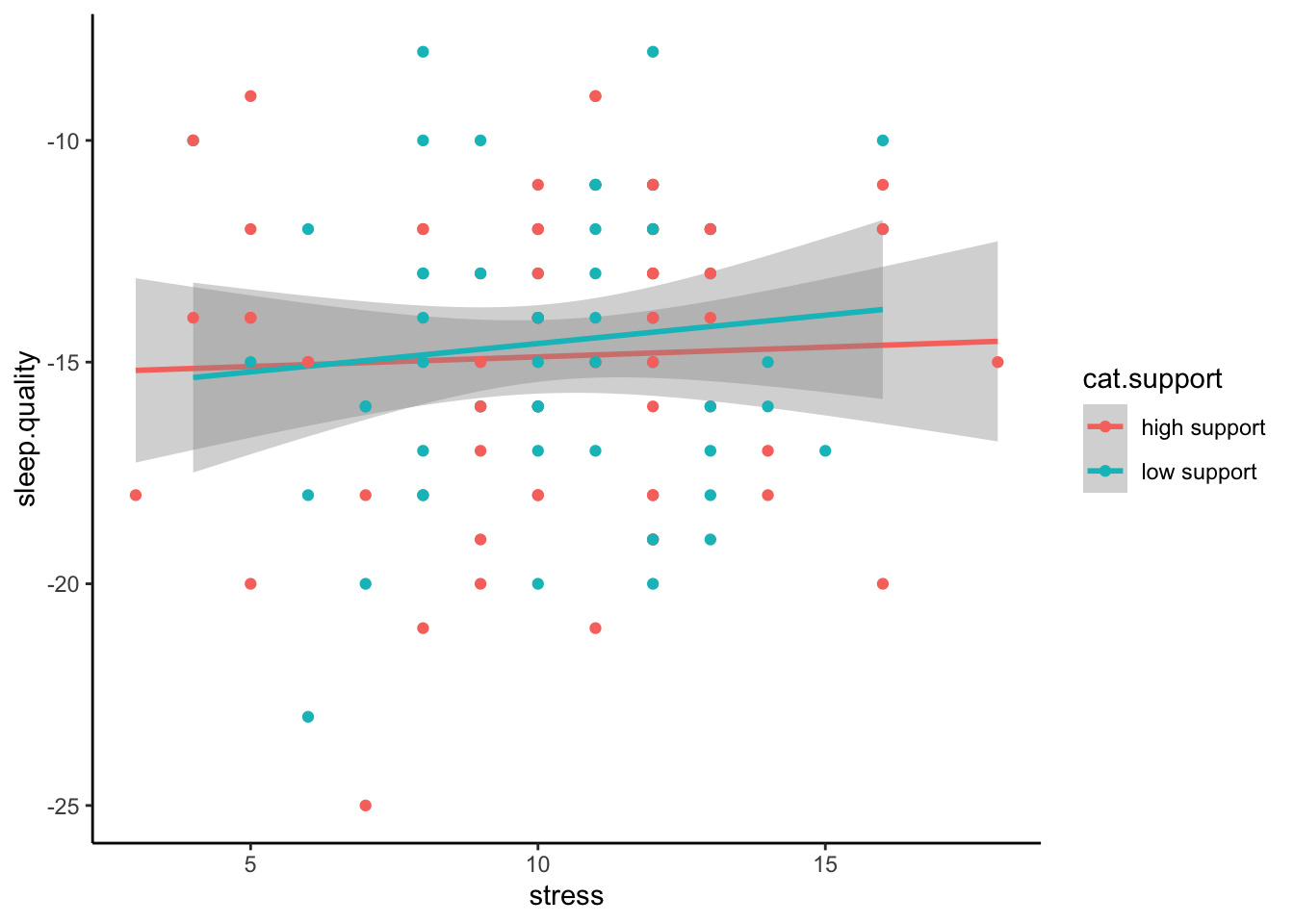

Even better is if can visualise the raw data in a scatterplot:

ggplot(plot.data,mapping = aes(x = stress,y = sleep.quality,group = cat.support,colour = cat.support)) +

geom_smooth(method = "lm") +

geom_point() +

theme_classic()

4. Write-up analysis.

Given that a moderation is exactly the same as a regression, we require the same information to do the write-up. As a reminder, here are the components you need to write up a regression:

For the model, you need the following information:

- the R-squared statistic.

- the F-statistic and associated degrees of freedom.

- the p-value for the model.

For each predictor, you need the following information:

- the standardised coefficient.

- the t-statistic.

- the p-value for that coefficient.

As mentioned last week, with more than one predictor in the model, it may make more sense to report the statistics in a table. This includes models with interaction effects (in the case above, the interaction effect is our third predictor).

Here is an example of the write-up:

We used a linear regression to predict sleep quality from the level of perceived stress, level of social support, and the interaction between the two. We found that model explained 19.11% of the variance (F(3,84) = 6.61, p = 0). Regression coefficients are reported in Table 1. There was a significant, negative main effect of stress on sleep quality. There was no significant main effect of social support on sleep quality. The interaction between perceived stress and social support was not significant.

Table 1. Regression coefficients for linear model predicting stress.

| predictor | beta | t | p-value |

|---|---|---|---|

| Perceived Stress | -0.44 | -4.43 | 0 |

| Social Support | 0.02 | 0.24 | 0.809 |

| PS * SS | 0.07 | 0.72 | 0.475 |

Two-Way Between-Subjects ANOVA

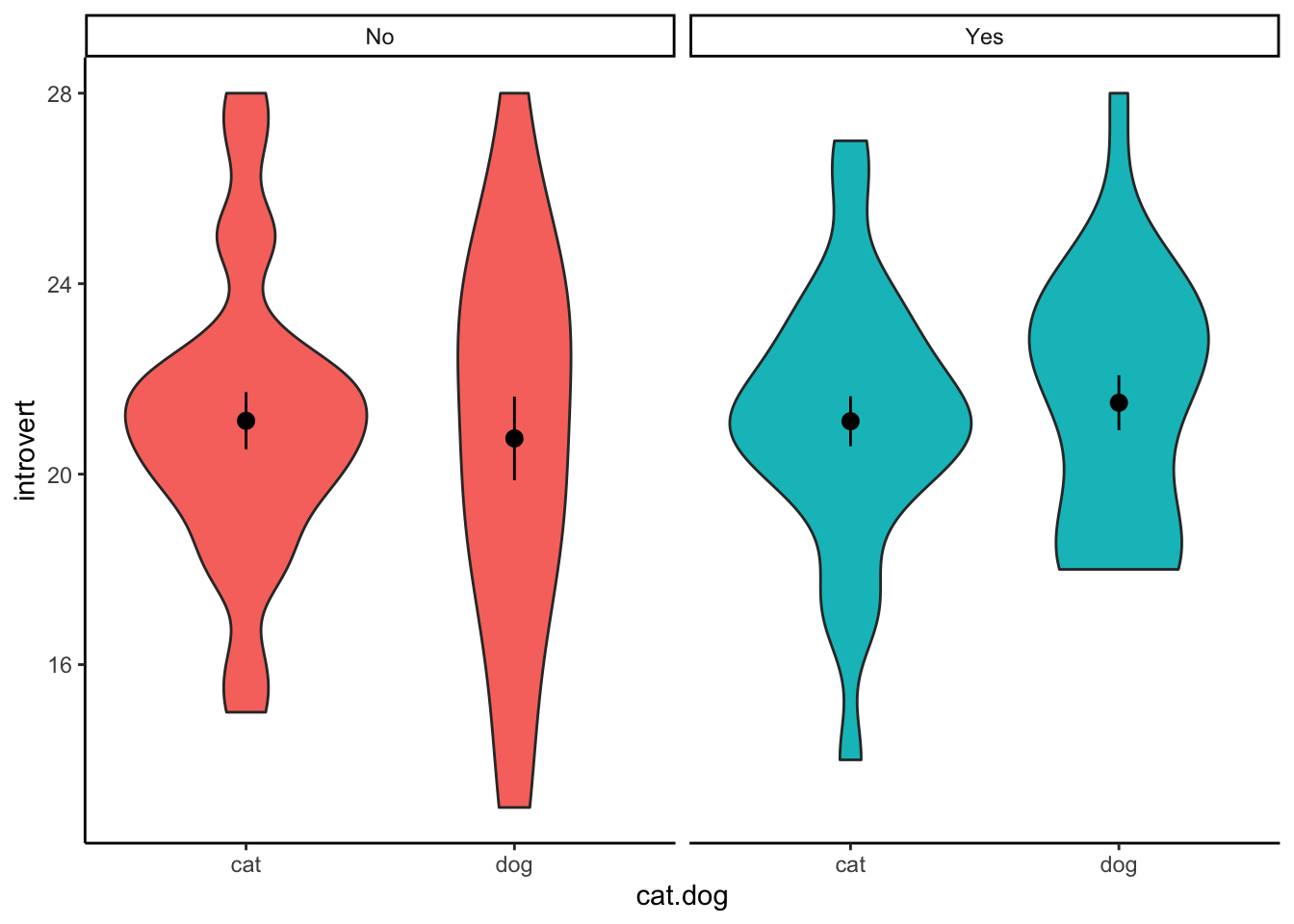

A two-way ANOVA is used when you want to evaluate the effects of two categorical IVs on a continuous DV. Much of what we have covered regarding a linear regression with multiple predictors applies with a two-way ANOVA, but with two categorical IVs. In the example below, we will test whether there is an association between between introversion and identifying as either a cat- or dog-person, and whether this association differs depending on whether you play video-games or not.

1. Clean the data for analysis.

clean.data2 <- data %>%

filter(cat.dog != "both") %>%

filter(cat.dog != "neither") %>%

filter(cat.dog != "") %>%

mutate( introvert = introversion2 + introversion5 + introversion7 + introversion8 + introversion10,

introvert = (introvert * -1) + 6*6 ) %>%

select(introvert,video.games,cat.dog)2. Run statistical test

The function to run a two-way ANOVA is the same as a one-way ANOVA: aov(). R is smart enough to determine which statistical test to run based on how many IVs are in the formula. The formula works the same as an interaction in a regression, where both categorical IVs are “multiplied” together. R will automatically include the main effects for each IV and the interaction. Also, similar to the one-way ANOVA, in order to get output that is interpretable, you can pipe the result to the summary() function.

aov(introvert ~ cat.dog*video.games,data = clean.data2) %>%

summary()## Df Sum Sq Mean Sq F value Pr(>F)

## cat.dog 1 83.9 83.91 5.536 0.0229 *

## video.games 1 11.4 11.40 0.752 0.3902

## cat.dog:video.games 1 88.2 88.20 5.819 0.0198 *

## Residuals 47 712.4 15.16

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Similar to a one-way ANOVA, the two-way ANOVA will tell you whether or not there is a difference, but it will not tell you where that difference is. In order to determine this, you will need to calculate summary statistics (e.g., means for each cell) and conduct follow-up comparisons.

Calculate Summary Statistics

clean.data2 %>%

group_by(video.games,cat.dog) %>%

summarise(

count = n(),

mean = mean(introvert,na.rm = TRUE),

sd = sd(introvert,na.rm = TRUE)

)## # A tibble: 4 × 5

## # Groups: video.games [2]

## video.games cat.dog count mean sd

## <chr> <chr> <int> <dbl> <dbl>

## 1 No cat 8 19 3.12

## 2 No dog 22 18.5 3.95

## 3 Yes cat 5 24.6 2.88

## 4 Yes dog 16 17.9 4.35Multiple Comparisons

To assess the significant interaction, we test whether the difference between cat-people and dog-people differs depending on whether they play video-games or not.

t.test(introvert ~ cat.dog,data = filter(clean.data2,video.games == "Yes" & (cat.dog == "cat" | cat.dog == "dog")))##

## Welch Two Sample t-test

##

## data: introvert by cat.dog

## t = 3.9889, df = 10.33, p-value = 0.002405

## alternative hypothesis: true difference in means between group cat and group dog is not equal to 0

## 95 percent confidence interval:

## 2.984741 10.465259

## sample estimates:

## mean in group cat mean in group dog

## 24.600 17.875t.test(introvert ~ cat.dog,data = filter(clean.data2,video.games == "No" & (cat.dog == "cat" | cat.dog == "dog")))##

## Welch Two Sample t-test

##

## data: introvert by cat.dog

## t = 0.39333, df = 15.766, p-value = 0.6993

## alternative hypothesis: true difference in means between group cat and group dog is not equal to 0

## 95 percent confidence interval:

## -2.397861 3.488770

## sample estimates:

## mean in group cat mean in group dog

## 19.00000 18.454553. Plot data

When plotting the data, we want to visualise the relationship between introversion and cat-people/dog-people separated by the moderator - whether participants play video-games or not. We can do this by adding a facet_wrap() to our standard violin plot. Here, we only need to specify which variable to separate the plot on.

ggplot(clean.data2,aes(x = cat.dog,y = introvert,fill = video.games)) +

geom_violin() +

stat_summary() +

facet_wrap(~ video.games) +

theme_classic() +

theme(legend.position = "none")

Mixed-Design ANOVA

In the two-way ANOVA above, both IVs were between-subjects variables. However, the aov() can also run an ANOVA when one (or both) IVs are within-subjects. These are known as mixed-designs ANOVAs (or repeated-measures ANOVA if both IVs are within-subjects).

In the example below, we will test whether playing video games moderates the association between intended alcoholic drinks before and after viewing the Scottish government’s drinking guidelines.

1. Clean data for analysis.

Below, we select the key variables for analysis and reshape the data. This second step is necessary since we are dealing with within-subjects variables. Also, since we will be group data by the student.no, we will need to ensure that R treats it as a factor.

mx.data <- data %>%

select(student.no,video.games,pre.drink,post.drink) %>%

filter(!is.na(pre.drink),!is.na(post.drink)) %>%

gather(key = "condition",value = "alcohol",pre.drink,post.drink) %>%

mutate(student.no = as.factor(student.no))2. Conduct statistical test.

Again, we use the aov() function to run our mixed-design ANOVA. However, in order to tell R which factor is within-subjects, we need to adjust our formula to the following format:

DV ~ IV1*IV2 + Error(ID/IV2)So much like before, the DV is on the left of the ~ symbol, and the IVs are on the right. In order to denote that we are interested in the interaction between the two, we continue to “multiply” the IVs together. The new part of the formula comes where we add to the formula the “Error” part above. This tells R 1) what is the within-subjects variable, and 2) how that within-subjects variable is grouped. In our example, condition is the within-subjects variable, and since the data is within-participants, we will use student.no to tell R which observations are linked.

aov(alcohol ~ condition*video.games + Error(student.no/condition),data = mx.data) %>%

summary()##

## Error: student.no

## Df Sum Sq Mean Sq F value Pr(>F)

## video.games 1 571 571.1 9.742 0.00269 **

## Residuals 65 3810 58.6

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Error: student.no:condition

## Df Sum Sq Mean Sq F value Pr(>F)

## condition 1 5.04 5.045 2.957 0.0902 .

## condition:video.games 1 0.07 0.075 0.044 0.8347

## Residuals 65 110.88 1.706

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Notice in the output above that there are two ANOVA tables. The first is the between-subjects effects, which reports the main effect for video.games on alcohol consumption. Interestingly, this is significant in the above example. The second table has the within-subjects effects. This includes the main effect of condition, plus the interaction between our two IVs. In the example above, the interaction is non-significant, indicating that playing video games does not moderate the intended consumption of alcohol before and after viewing the Scottish government’s guidelines.

Mediation

As covered in the Lecture series, mediation describes a relationship where the influence of one variable on another can be explained through a third variable. In the example below, we will test whether the relationship between attitudes towards exercise and exercise behaviour can be explained through intentions to exercise (i.e., individuals who have positive attitudes about exercise increase their intention to exercise, which in turn increases exercise behaviour). For more information on these scales (and some of the ones we will use later), see this paper: https://search.proquest.com/docview/202682863. Note, we only use the first 5-items on each scale to keep things simple.

1. Clean data for analysis.

First, we must calculate the variables that we need for our analysis. This process should be fairly familiar by now.

data.clean <- data %>%

mutate(attitude = exercise.attitude.1 + exercise.attitude.2 + exercise.attitude.3 + exercise.attitude.4 + exercise.attitude.5,

intention = exercise.intention.1 + exercise.intention.2 + exercise.intention.3 + exercise.intention.4 + exercise.intention.5,

behaviour = exercise.behaviour.1 + exercise.behaviour.2 + exercise.behaviour.3 + exercise.behaviour.4 + exercise.behaviour.5) %>%

dplyr::select(student.no,attitude,intention,behaviour) %>%

filter(!is.na(attitude)) %>%

filter(!is.na(intention)) %>%

filter(!is.na(behaviour))2. Run statistical test

Remember, mediation is when the effect of one IV could be explained through a third variable (mediation). If there is an effect in a model without the mediator, but that effect is reduced (or disappears) when the mediator is included, there is a chance the mediation is happening. In order to check whether our variables meet these conditions, we need to conduct a number of linear regressions.

Model 1

Here, we test whether there is an association between the predictor (attitudes) and the outcome variable (behaviour):

lm(behaviour ~ attitude,data = data.clean) %>%

lm.beta() %>%

summary()##

## Call:

## lm(formula = behaviour ~ attitude, data = data.clean)

##

## Residuals:

## Min 1Q Median 3Q Max

## -13.3222 -5.3853 0.6778 5.2905 16.8759

##

## Coefficients:

## Estimate Standardized Std. Error t value Pr(>|t|)

## (Intercept) 9.7999 0.0000 1.2986 7.546 4.59e-11 ***

## attitude 0.7748 0.4539 0.1650 4.697 1.01e-05 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 6.923 on 85 degrees of freedom

## Multiple R-squared: 0.2061, Adjusted R-squared: 0.1967

## F-statistic: 22.06 on 1 and 85 DF, p-value: 1.006e-05Model 2

Here, we test whether including the mediator (intention) in the model changes the relationship between the predictor (attitude) and the outcome variable (behaviour):

lm(behaviour ~ attitude + intention,data = data.clean) %>%

lm.beta() %>%

summary()##

## Call:

## lm(formula = behaviour ~ attitude + intention, data = data.clean)

##

## Residuals:

## Min 1Q Median 3Q Max

## -14.9584 -5.8781 0.4981 4.9684 16.6849

##

## Coefficients:

## Estimate Standardized Std. Error t value Pr(>|t|)

## (Intercept) 9.4906 0.0000 1.3090 7.250 1.88e-10 ***

## attitude 0.6535 0.3829 0.1848 3.537 0.000662 ***

## intention 0.2160 0.1540 0.1518 1.423 0.158488

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 6.882 on 84 degrees of freedom

## Multiple R-squared: 0.2247, Adjusted R-squared: 0.2063

## F-statistic: 12.18 on 2 and 84 DF, p-value: 2.274e-05Model 3

Also, in order for there to be a mediation, we must observe a relationship between the predictor (attitude) and the mediator (intention):

lm(intention ~ attitude,data = data.clean) %>%

lm.beta() %>%

summary()##

## Call:

## lm(formula = intention ~ attitude, data = data.clean)

##

## Residuals:

## Min 1Q Median 3Q Max

## -12.4319 -3.4219 0.5168 2.9099 12.4456

##

## Coefficients:

## Estimate Standardized Std. Error t value Pr(>|t|)

## (Intercept) 1.4319 0.0000 0.9224 1.552 0.124

## attitude 0.5612 0.4610 0.1172 4.790 7e-06 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 4.918 on 85 degrees of freedom

## Multiple R-squared: 0.2126, Adjusted R-squared: 0.2033

## F-statistic: 22.94 on 1 and 85 DF, p-value: 6.995e-06Mediation Analysis

While we may or may not meet the conditions for a mediation above, we will continue with the analysis to demonstrate the process of conducting a mediation analysis regardless.

In order to conduct a mediation analysis in R, we will load the psych package. If you haven’t installed the psych package yet, make sure to do this before loading the psych package.

library(psych)The function that runs the mediation analysis is aptly named mediate(). Like all analysis functions, the mediate() function accepts a formula and a data.frame, but also a couple of options that we will want to change. For the formula, the mediate function takes a specific form, where the mediator is put inside brackets on the right-hand side of the ~ symbol:

DV ~ IV + (Mediator)So for our analysis, the code becomes the following. Note, we also want to set the ‘std’ argument to TRUE to ensure we receive standardised estimates, and the ‘plot’ argument to FALSE so we are only seeing the numeric output (we will see the plot later).

model <- mediate(behaviour ~ attitude + (intention),data = data.clean,std = TRUE,plot = FALSE)

model##

## Mediation/Moderation Analysis

## Call: mediate(y = behaviour ~ attitude + (intention), data = data.clean,

## std = TRUE, plot = FALSE)

##

## The DV (Y) was behaviour . The IV (X) was attitude . The mediating variable(s) = intention .

##

## Total effect(c) of attitude on behaviour = 0.45 S.E. = 0.1 t = 4.7 df= 85 with p = 1e-05

## Direct effect (c') of attitude on behaviour removing intention = 0.38 S.E. = 0.11 t = 3.54 df= 84 with p = 0.00066

## Indirect effect (ab) of attitude on behaviour through intention = 0.07

## Mean bootstrapped indirect effect = 0.07 with standard error = 0.06 Lower CI = -0.03 Upper CI = 0.2

## R = 0.47 R2 = 0.22 F = 12.18 on 2 and 84 DF p-value: 1.08e-06

##

## To see the longer output, specify short = FALSE in the print statement or ask for the summaryMost of the information above is what we have encountered previously. The main information we are interested in this output is the line on the mean bootstrapped indirect effect. A large indirect effect (and consequently a greater drop between the total effect and the direct effect) would indicate that mediation is occurring. Since we are bootstrapping, we can tell the significance through confidence intervals. If the range between the lower CI and the upper CI contains zero, then the indirect effect is not significant. If this range does not contain zero, then we have a significant mediation effect.

3. Plot data

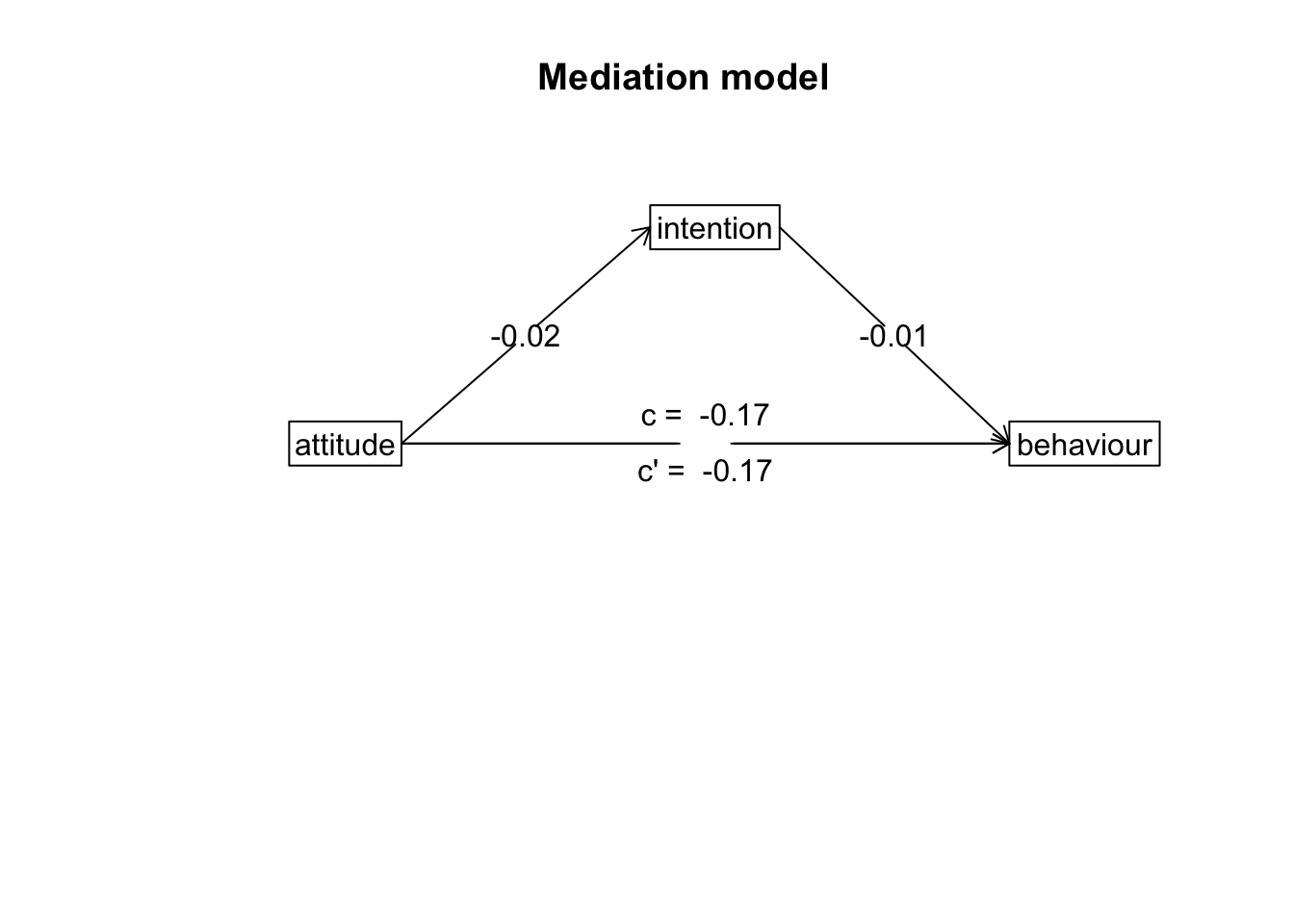

Path Diagram

For mediation, there’s no good way to plot the raw data that visualises the mediation. The most common way to visualise a mediated effect is through a path diagram. You can do this directly in the mediate() function by setting the ‘plot’ argument to TRUE or use the mediate.diagram() like below:

mediate.diagram(model)

4. Write-up analysis.

There are several things you need to include when writing up a mediation analysis. Writing up a mediation analysis includes the write-up for each individual models with and without the mediator (Model 1 and Model 2 above - these numbers are also included in the output for the mediate() function), and also the estimated indirect effect and associated confidence intervals. Usually, you would want to accompany the write-up with a path diagram such as the one above.

In the model where attitudes towards exercise predicted variance in exercise behaviour, the effect of exercise attitudes was not significant (beta = 0.45, p = 0). When including intention to exercise into the model, the effect of attitudes on behaviour did not change (beta = 0.38, p = 0.001). Mediation analysis revealled a non-significant indirect effect of intentions to exercise on the association between attitudes towards exercise and exercise behaviour (mean bootstrapped indirect effect = 0.38, 95% CI = -0.03, 0.2).

Note: While we report the results in text above, it is sometimes also easier to report the separate models in a table.